Need Help With Kubernetes Integration?

When it comes to large-scale deployment of containerized apps in private, public, and hybrid clouds, Kubernetes has emerged as the industry standard. Many popular public cloud providers, like Microsoft Azure or Oracle Cloud, provide managed Kubernetes services. A few years ago, Red Hat rebuilt OpenShift on top of Kubernetes, working with the Kubernetes community to deliver its next-generation container platform.

Google engineers originally developed Kubernetes before releasing it as open source in 2014. It is a descendant of Borg, the container orchestration platform that Google uses internally. Kubernetes means “helmsman” or “pilot” in Greek, hence the ship's wheel (helm) in the Kubernetes logo.

Nowadays, small and medium-sized businesses can benefit significantly from the Kubernetes ecosystem in the long run, including considerable savings on infrastructure and maintenance costs. IntelliSoft can assist with implementing this technology.

Check out our project for ZyLAB. The customer asked us to optimize the software's performance. To do this, we loosened the coupling between services to give each one more autonomy. We packaged the app's features in Docker containers orchestrated by Kubernetes clusters, removing the need for any specific environment software.

This post will cover such topics as features of Kubernetes containers, their advantages, and use cases.


What Are Kubernetes Containers?

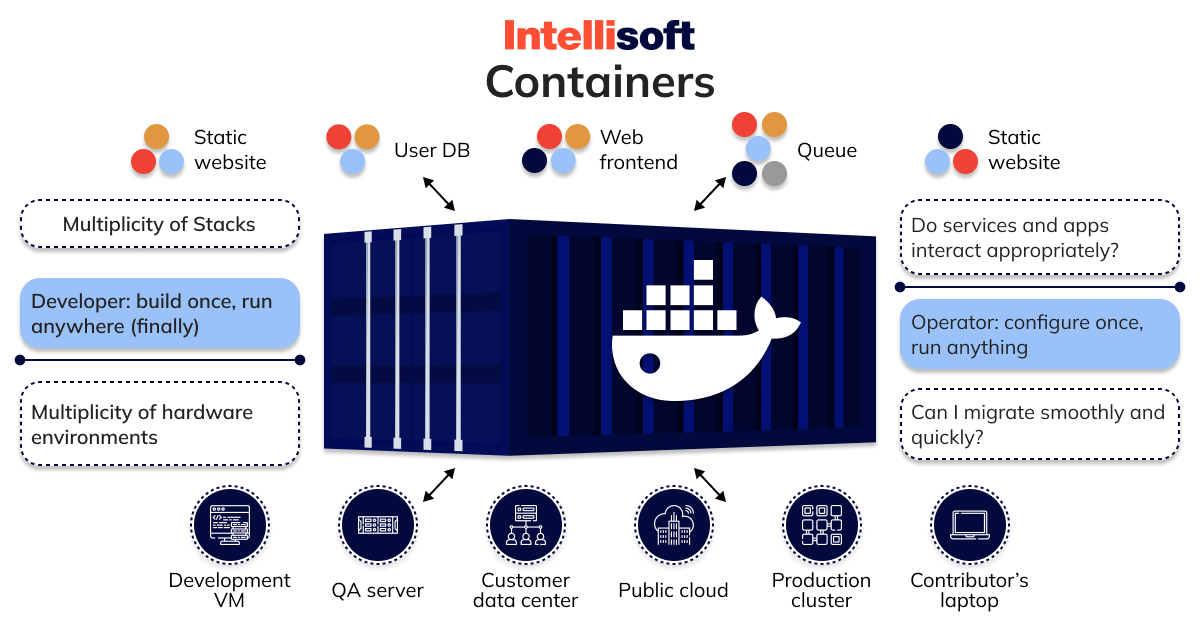

What exactly is a container in Kubernetes? Like virtual machines (VMs), each Kubernetes container has its own dedicated resources, including CPU, filesystem, process space, memory, and others. Unlike VMs, however, Kubernetes containers are lightweight because they:

- Have relaxed isolation properties, allowing applications to share the host Operating System (OS) kernel.

- Are portable across a variety of OS distributions.

- Don't rely on software installed on the underlying system, since each container packages its own dependencies.

Containers in Kubernetes offer much of the isolation, scalability, and usefulness of virtual machines. But since they do not carry the payload of their own operating system instance, they are lighter (i.e., need less space) than VMs. They let you run more programs on fewer machines (virtual and physical) with fewer instances of the operating system. Containers are also simpler to move across workstations, data centers, and cloud environments, which makes them an excellent fit for Agile and DevOps development methodologies.

Kubernetes' main competitors include the following:

- CaaS, or container-as-a-service. It refers to container management solutions that don't need Kubernetes' intricate orchestration capabilities, such as AWS Fargate and Azure Container Instances.

- PaaS, or platform-as-a-service. Products such as OpenShift Container Platform and Rancher provide full-featured platforms built around Kubernetes. They are easier to use and come with additional features, like security and networking, already implemented.

- Lightweight container orchestrators. Kubernetes is the most common, but there are others. Docker Swarm and Nomad, two mature orchestrators, are easier to use and manage than Kubernetes.

- Managed Kubernetes services. Services like GKE and EKS let you run hosted, managed clusters. These services simplify Kubernetes deployment, upgrades, and maintenance, but they still require Kubernetes expertise.

How Does a Kubernetes Container Work?

A container is created from an image made up of stacked layers, and each layer stores a different set of files. For instance, one layer may hold the base operating system files, another the application's dependencies, and another the application code and its default settings. The developer specifies which layers to use and the order in which they are combined when building the container image. A running container then adds its own writable layer on top.

The computing environment is organized in levels as well. Counting from the bottom, level 1 is the infrastructure, level 2 is the host operating system, and level 3 is the container platform. The containers themselves sit on the levels above. A container image is built up from its bottom (base) image; for example, an image containing a test application can be created and then run on the container platform.
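Here is a simplified Python sketch of the layering idea. Each image layer is modeled as a hypothetical mapping from file paths to contents. A path is resolved the way a union filesystem resolves it: the topmost layer that contains the file wins.

Real runtimes use overlay filesystems (such as OverlayFS) and also handle file deletions, which this toy model ignores. All layer names and contents below are made up for illustration.

```python
from typing import Dict, List, Optional

# A layer is modeled as a mapping of file path -> file contents (hypothetical).
Layer = Dict[str, str]


def resolve(layers: List[Layer], path: str) -> Optional[str]:
    """Return the version of `path` the container sees.

    Layers are ordered bottom (index 0) to top; upper layers shadow lower ones.
    """
    for layer in reversed(layers):
        if path in layer:
            return layer[path]
    return None


def write(layers: List[Layer], path: str, contents: str) -> None:
    """Writes always go to the top (writable) layer; image layers stay untouched."""
    layers[-1][path] = contents


# Read-only image layers, bottom to top.
base_os: Layer = {"/etc/os-release": "debian", "/usr/bin/python3": "<binary>"}
dependencies: Layer = {"/app/requirements.txt": "flask"}
app_code: Layer = {"/app/main.py": "print('hello')", "/app/config.yaml": "debug: false"}

# Each running container gets its own empty writable layer on top.
container_layers: List[Layer] = [base_os, dependencies, app_code, {}]
```

Calling `write(container_layers, "/app/config.yaml", "debug: true")` changes what this container sees, while `app_code` itself stays unchanged. This is why many containers can share the same image layers.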

Once a container is made, it can be started up and used like any other program or system. You can also put containers into groups and manage them as a whole. This makes it easy to deploy and manage applications with several parts. For example, you could have a group of containers that handle different parts of an e-commerce website, like the front-end, back-end, database, and cache.
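In Kubernetes, this kind of grouping is usually done with labels: key/value pairs attached to objects. Other objects use them to select a group, for example a Service's selector or a Deployment's `selector.matchLabels`.

The sketch below mimics equality-based label matching in plain Python for the e-commerce example. The pod names and label keys are hypothetical, and this is a simplified model rather than the Kubernetes implementation.

```python
from typing import Dict, List

Labels = Dict[str, str]


def matches(selector: Labels, labels: Labels) -> bool:
    """Equality-based match: every key/value in the selector must appear in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select(selector: Labels, pods: List[dict]) -> List[str]:
    """Return the names of pods whose labels satisfy the selector."""
    return [pod["name"] for pod in pods if matches(selector, pod["labels"])]


# Hypothetical pods for the e-commerce site, plus one unrelated app.
pods = [
    {"name": "frontend-1", "labels": {"app": "shop", "tier": "frontend"}},
    {"name": "backend-1", "labels": {"app": "shop", "tier": "backend"}},
    {"name": "db-0", "labels": {"app": "shop", "tier": "database"}},
    {"name": "cache-0", "labels": {"app": "shop", "tier": "cache"}},
    {"name": "blog-1", "labels": {"app": "blog", "tier": "frontend"}},
]

if __name__ == "__main__":
    print(select({"app": "shop"}, pods))                      # ['frontend-1', 'backend-1', 'db-0', 'cache-0']
    print(select({"app": "shop", "tier": "frontend"}, pods))  # ['frontend-1']
```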

Is Kubernetes Better Than Its Alternatives?

With Kubernetes, one may design a cutting-edge, efficient, and effective solution. The platform’s many features and functions allow you to streamline and automate your DevOps operations.

Kubernetes services provide access to tools that make managing containers across several hosts easier by distributing their responsibilities. With these features, you may design a scalable platform.

Amazon Elastic Container Service (ECS), Docker Swarm, Nomad, and Red Hat OpenShift are some of the most well-known alternatives to Kubernetes. Each of them has its own quirks, but they all appear to have the essentials covered. Let’s examine these Kubernetes substitutes one by one to weigh their advantages and disadvantages.

According to studies, the number of Kubernetes engineers rose 67% to 3.9 million. That is 31% of all back-end developers, a share that is growing by 4% annually.

Kubernetes Features

Kubernetes is a crucial component of contemporary cloud architecture because it offers a rich collection of tools and features:

- Container management

Workloads in containerized environments run smoothly with Kubernetes. The platform also makes it easy to move your projects to the cloud.

- Scalability and availability

When your application provides several services, scaling up or down becomes a challenge. Kubernetes can automatically scale the number of running container replicas up or down according to your requirements.

- Service discovery

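Kubernetes gives each Service a stable DNS name and IP address, so the parts of an application can find each other without hard-coded addresses. The platform also lets you monitor individual applications and the entire cluster simultaneously. K8s (a.k.a. Kubernetes) offers an optional web interface, the Kubernetes Dashboard, which presents data about the operation of the services to developers in an intuitive way.

- Flexibility and modularization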

The flexibility and scalability built into Kubernetes are two of the main reasons it is becoming increasingly popular for cloud deployments. Its features make it possible to scale containerized apps and services automatically and to manage their associated resources dynamically.

- Self-recovery

This Kubernetes feature does superhuman feats. When a container fails for whatever reason, Kubernetes restarts it automatically. If a node fails, the containers it was running are rescheduled onto other nodes. If containers do not respond to user-defined health checks, Kubernetes restarts them or stops sending them traffic until they become responsive again.

- Logging and monitoring

Monitoring Kubernetes clusters lets you see issues and handle them. Monitoring Kubernetes cluster uptime, resource consumption (such as memory, CPU, and storage), and component interaction makes managing containerized workloads easier.

Cluster administrators and end users may identify problems including insufficient resources, failures, failed pod launches, and disconnected nodes with the help of Kubernetes monitoring. Several companies now use cloud-native monitoring tools to keep tabs on cluster performance.
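The Python function below is a minimal sketch of the kind of check a monitoring tool performs. It scans a pod list in the JSON shape returned by `kubectl get pods -o json` and flags three things:

- pods stuck in a non-running phase;
- containers waiting in states like `CrashLoopBackOff`;
- containers that restart often.

The restart threshold and the sample data are assumptions for illustration. A real tool would also consider how long a pod has been pending, since a short Pending phase during startup is normal.

```python
import json
from typing import Dict, List


def find_problem_pods(pod_list: Dict, restart_threshold: int = 3) -> List[str]:
    """Return human-readable findings for pods that look unhealthy."""
    findings = []
    for pod in pod_list.get("items", []):
        name = pod["metadata"]["name"]
        status = pod.get("status", {})
        phase = status.get("phase", "Unknown")
        # Pods that never reached Running (or failed) are reported by phase.
        if phase in ("Pending", "Failed", "Unknown"):
            findings.append(f"{name}: phase={phase}")
            continue
        for container in status.get("containerStatuses", []):
            waiting = container.get("state", {}).get("waiting")
            restarts = container.get("restartCount", 0)
            if waiting:
                reason = waiting.get("reason", "unknown")
                findings.append(f"{name}/{container['name']}: waiting ({reason})")
            elif restarts >= restart_threshold:
                findings.append(f"{name}/{container['name']}: {restarts} restarts")
    return findings


# Hypothetical excerpt of `kubectl get pods -o json` output.
SAMPLE = json.loads("""
{"items": [
  {"metadata": {"name": "web-1"},
   "status": {"phase": "Running",
              "containerStatuses": [{"name": "web", "ready": true, "restartCount": 0,
                                     "state": {"running": {}}}]}},
  {"metadata": {"name": "web-2"},
   "status": {"phase": "Running",
              "containerStatuses": [{"name": "web", "ready": false, "restartCount": 7,
                                     "state": {"waiting": {"reason": "CrashLoopBackOff"}}}]}},
  {"metadata": {"name": "worker-1"}, "status": {"phase": "Pending"}}
]}
""")

if __name__ == "__main__":
    for finding in find_problem_pods(SAMPLE):
        print(finding)
```

To try it against a live cluster, you could pipe `kubectl get pods -o json` into a script that passes `json.load(sys.stdin)` to `find_problem_pods`.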

- DevSecOps maintenance

DevSecOps is a modern security approach that automates container operations across clouds, integrates security across the container lifecycle, and enables teams to develop safe, high-quality software rapidly.

- Persistent storage

It is the ability to mount and dynamically add storage. Using Kubernetes, developers can mount the storage system of their choice, such as local storage, public cloud storage, and more. The underlying storage system must still be provided.

Kubernetes gives users and administrators an API that separates the details of how storage is provided from how it is consumed. Administrators describe available storage as PersistentVolumes, and applications request it through PersistentVolumeClaims.

- Secrets management

Kubernetes handles secrets in two ways: with built-in secrets and with user-created ones. Service accounts automatically provide containers with API credentials. In older Kubernetes versions these were stored as automatically generated Secrets, while newer versions mount short-lived tokens instead. This automatic mounting can be turned off if necessary.

You can store your own private information in a custom Secret that you define and create. Kubernetes Secrets are more secure and flexible than alternatives such as writing confidential data directly into a Pod definition or baking it into a Docker image.
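One detail worth knowing: the values in a Secret's `data` field are only base64-encoded, not encrypted. Anyone allowed to read the Secret can decode them. Encryption at rest, and RBAC rules that restrict access, are configured separately.

The Python sketch below shows the encoding by building a `v1` Secret manifest as JSON, which `kubectl apply -f` accepts. The Secret name and values are hypothetical placeholders.

```python
import base64
import json
from typing import Dict


def make_secret(name: str, values: Dict[str, str]) -> dict:
    """Build an Opaque Secret manifest; `data` values must be base64-encoded strings."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {
            key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in values.items()
        },
    }


# Hypothetical credentials; never commit real secrets to source control.
secret = make_secret("db-credentials", {"username": "admin", "password": "s3cr3t"})

if __name__ == "__main__":
    print(json.dumps(secret, indent=2))
    # Base64 is reversible by anyone: this is encoding, not encryption.
    print(base64.b64decode(secret["data"]["username"]).decode("utf-8"))  # admin
```

Kubernetes also accepts plain-text values under `stringData` and encodes them for you.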

When to Use a Kubernetes Container

Kubernetes is more than just an orchestration system. Technically, orchestration means executing a defined workflow: first do A, then B, then C. With Kubernetes, it doesn't matter which route you take from A to C, and no centralized control is required.

In fact, Kubernetes eliminates the need for orchestration in this sense. It consists of independent, composable control processes whose main task is to continuously drive the current state toward the desired state.

As a result, the system is easier to use and more robust, reliable, sustainable, and extensible.
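The desired-state idea can be illustrated with a toy control loop. The hypothetical `ReplicaController` class below is not Kubernetes code; real controllers are written in Go and watch the API server.

It only shows the principle: each pass compares the current state with the desired state and acts on the difference, regardless of how the two diverged.

```python
from typing import List


class ReplicaController:
    """Toy controller that keeps `desired` copies of a pod running (hypothetical)."""

    def __init__(self, prefix: str) -> None:
        self.prefix = prefix
        self.running: List[str] = []  # current state: names of running pods
        self._next_id = 1

    def reconcile(self, desired: int) -> List[str]:
        """One pass of the loop: drive the current state toward `desired`."""
        actions = []
        while len(self.running) < desired:
            pod = f"{self.prefix}-{self._next_id}"
            self._next_id += 1
            self.running.append(pod)
            actions.append(f"create {pod}")
        while len(self.running) > desired:
            pod = self.running.pop()
            actions.append(f"delete {pod}")
        return actions


if __name__ == "__main__":
    controller = ReplicaController("web")
    print(controller.reconcile(3))      # creates web-1, web-2, web-3
    controller.running.remove("web-2")  # simulate a crashed pod
    print(controller.reconcile(3))      # replaces it with web-4
    print(controller.reconcile(3))      # nothing to do: already at desired state
```

Because the loop is driven by state rather than by a fixed sequence of steps, running it again when nothing has changed is harmless.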

Containers allow developers to divide applications into smaller parts with a clear separation of concerns. The abstraction provided by an individual container image helps us understand how distributed applications are built. This modular approach enables faster development by smaller, focused teams, each responsible for specific containers. It also allows us to isolate dependencies and make wider use of small components.

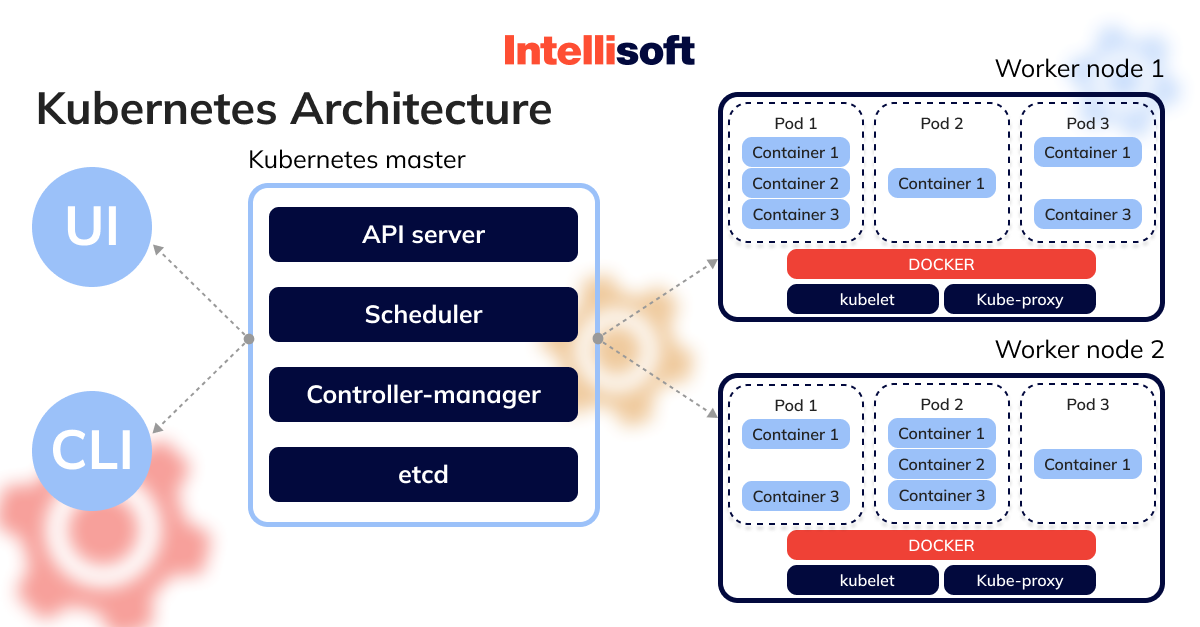

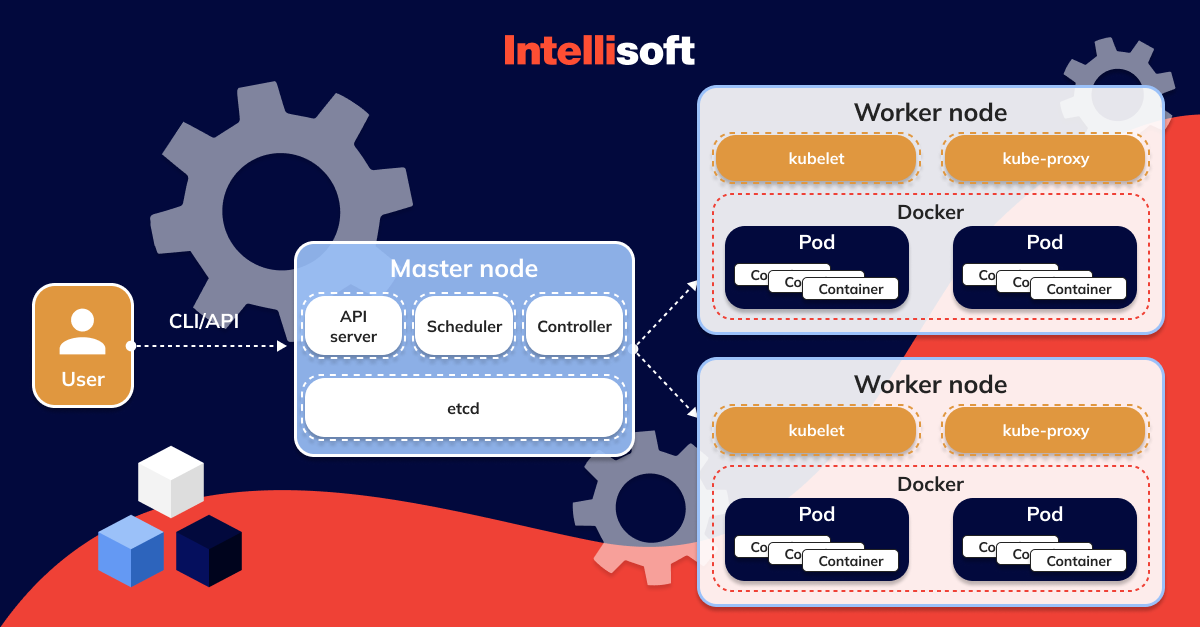

You can’t do this with containers alone. In Kubernetes, however, you can achieve it with Pods. A Pod is a group of one or more containers with shared storage and network resources, plus a specification for how to run those containers. If you're wondering about pods vs containers: a container runs logically inside a Pod, while a group of Pods, related or unrelated, runs on a cluster.
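For illustration, here is a Python sketch that emits a Pod manifest as JSON (`kubectl apply -f` accepts JSON as well as YAML). The Pod runs two containers that share an `emptyDir` volume: a web server writes its logs there, and a sidecar reads them. The containers also share the Pod's network namespace, so they can reach each other on `localhost`.

The names, image tags, and paths are assumptions chosen for the example.

```python
import json

# Hypothetical two-container Pod: both containers mount the same emptyDir volume.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-log-reader", "labels": {"app": "web"}},
    "spec": {
        # An emptyDir volume lives as long as the Pod and is shared by its containers.
        "volumes": [{"name": "logs", "emptyDir": {}}],
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.25",
                "volumeMounts": [{"name": "logs", "mountPath": "/var/log/nginx"}],
            },
            {
                "name": "log-reader",
                "image": "busybox:1.36",
                # Follow the access log written by the web container.
                "command": ["sh", "-c", "tail -F /logs/access.log"],
                "volumeMounts": [{"name": "logs", "mountPath": "/logs"}],
            },
        ],
    },
}

if __name__ == "__main__":
    print(json.dumps(pod, indent=2))
```

Saving the output to `pod.json` and running `kubectl apply -f pod.json` would create the Pod in the current namespace.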

Kubernetes is mainly used by site reliability engineering (SRE) experts and those who deal with DevOps efficiency and application packaging. For example, once developers have written code and built a program binary, someone has to prepare the images the application will run in and build a pipeline.


Imagine an application that has to run on a distributed system of dozens or even hundreds of servers, using Docker or any other tool to run containers on each of them. You can read about the Kubernetes and Docker comparison in our previous post. Each server would have to be configured and administered separately. We cannot apply a uniform configuration to the whole group at once, so it becomes a largely manual job. Even if we write a set of automation scripts, they won't be a universal solution; for any other setup, we would have to write them again.

That’s where Kubernetes steps in. We describe the necessary infrastructure characteristics (application resources, network design, and so on), and Kubernetes executes the instructions and manages the servers connected to it. This way it saves time for administrators and developers.
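In practice, that description is a manifest. The Python sketch below generates a minimal `apps/v1` Deployment that asks for a number of replicas of one container, with CPU and memory requests. Kubernetes then schedules the pods across the available servers and keeps that many running.

The helper name, image, port, and resource values are hypothetical examples.

```python
import json


def make_deployment(name: str, image: str, replicas: int,
                    cpu: str = "250m", memory: str = "256Mi") -> dict:
    """Describe the desired state; Kubernetes works out where and how to run it."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels in the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": 8080}],
                        # Requests tell the scheduler how much capacity to reserve.
                        "resources": {"requests": {"cpu": cpu, "memory": memory}},
                    }]
                },
            },
        },
    }


if __name__ == "__main__":
    deployment = make_deployment("shop-backend", "registry.example.com/shop-backend:1.0", 5)
    print(json.dumps(deployment, indent=2))
```

To change the infrastructure, you edit `replicas` or `image` and apply the manifest again; no per-server scripts are involved.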

Kubernetes Use Cases

Below we gathered key examples of how businesses leverage Kubernetes, so that you can understand whether this technology suits your project’s needs or not.

- Airbnb

Airbnb is a fascinating example of Kubernetes usage. Airbnb used the platform to move away from its monolithic applications. This streamlined the work of 1,000 software engineers and enabled over 500 deployments every day across more than 250 critical containerized apps.

- Shopify

Shopify is also moving all of its apps to Google Cloud Platform, which is a major migration effort. Developers redesigned the application around Docker's container-based architecture and then orchestrated it using Kubernetes.

- OpenAI

OpenAI is an artificial intelligence research lab that requires scalable, cloud-based deep learning infrastructure to conduct its experiments. The key factors were portability, speed, and affordability.

OpenAI first deployed Kubernetes on AWS but switched to Azure in early 2017. It runs key experiments in fields like robotics and gaming on Azure or in its own data centers, depending on which cluster has available resources. “We use Kubernetes largely as a batch scheduling system and rely on our auto scaler to dynamically scale up and down our cluster,” explains Christopher Berner, Head of Infrastructure. “This fact enables us to provide low latency and quick iteration while drastically lowering the expenses associated with unused nodes.”

- Tinder

Tinder is one of the best examples of reducing time to market. Because of its enormous volume of traffic, Tinder's engineering team had problems with scale and stability. They concluded that Kubernetes was the solution.

The technical team at Tinder moved 200 services and managed a cluster of 1,000 nodes, 15,000 pods, and 48,000 active containers using Kubernetes. Even though the conversion procedure wasn’t simple, the Kubernetes solution was essential to future-proofing seamless company operations.

When Not to Use Kubernetes

It might be challenging to appreciate the full value of containers and an orchestration tool if your application has a monolithic design. Containers are designed to divide your program into distinct components. A monolithic architecture, by definition, keeps all parts of the application (including I/O, data processing, and rendering) interconnected.

With Kubernetes' notoriously steep learning curve, you can expect to spend a lot of time training staff, dealing with the teething problems of a new solution, and so on. It's probably not the best option if your team isn't eager to try new things and take calculated risks.

Advantages of Kubernetes Containers

Let’s take a look at the main Kubernetes advantages:

Kubernetes Automates Containerized Environments

Containerization has numerous advantages. Containers are smaller, faster, and more portable than traditional virtual machines (VMs) because they do not require a complete operating system and instead operate on a shared kernel.

Containers are the preferred option for businesses employing microservices architecture. Kubernetes facilitates containerized environments as the orchestration system. Kubernetes automates the requirements for operating containerized workloads.

Kubernetes Provides Native Tooling

Engineers passionate about Kubernetes spend their time building tools for it, both open-source and third-party commercial ones. This matters because, for all of Kubernetes' benefits, legacy tools can be hard to use with it and sometimes need fixing.

The open-source community has come together to support popular tools like Prometheus, which is often used to collect and track metrics. There are also many Kubernetes-native third-party solutions for monitoring, logging, cost management, load testing, and security, among other things.

Engineering teams can save time and get more done when they use tools built natively for Kubernetes.

Kubernetes Opens Up Multi-Cloud Opportunities

Thanks to their portability, Kubernetes workloads can remain in a single cloud or be spread across several clouds. At IntelliSoft, we can effectively expand a client's environments from one managed Kubernetes provider to another while running workloads on various managed Kubernetes providers.


Kubernetes-specific products are currently available from the majority of the major cloud providers. For instance, Google’s GCP offers GKE, Amazon’s AWS offers EKS, and Microsoft’s Azure offers AKS.

All enterprises may benefit from multi-cloud setups and avoid vendor lock-in thanks to Kubernetes.

Kubernetes Is About Cost Efficiencies and Savings

The potential for financial savings and efficiency was an early and prominent motivation for adopting Kubernetes. Kubernetes has helped businesses of all sizes save money while having various degrees of demand and scalability problems.

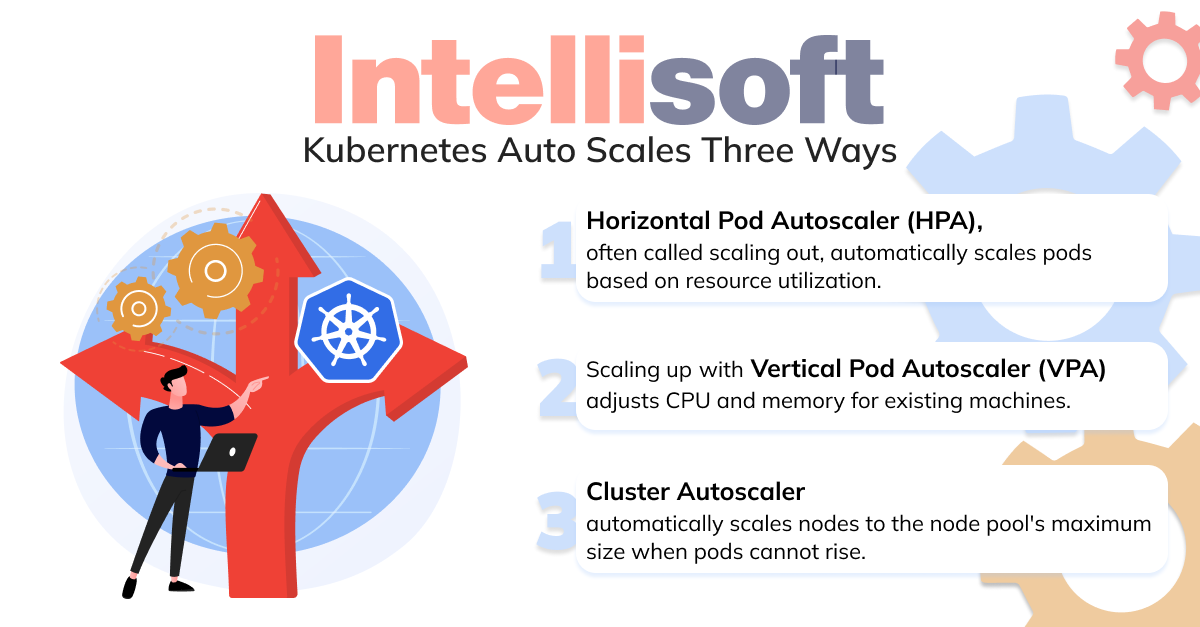

As previously noted, Kubernetes' autoscaling features allow businesses to dynamically increase or decrease the amount of resources they use. When combined with a cloud service that can scale on demand, Kubernetes can use the available resources efficiently.

For instance, suppose you run a video streaming service with viewing spikes at specific times of the night. Kubernetes can adjust the number of pods and nodes to match demand, ensuring good performance for users without wasting resources.
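The core arithmetic here is the formula documented for the Horizontal Pod Autoscaler: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). Scaling is skipped when the ratio is within a tolerance (0.1 by default), and the result is bounded by the `minReplicas` and `maxReplicas` set in the autoscaler.

The Python function below reproduces only that formula. The real controller also applies stabilization windows and handles missing metrics and not-ready pods. The example figures are hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Core Horizontal Pod Autoscaler formula, without stabilization or edge cases."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: leave it alone
    # Multiply before dividing so exact results stay exact in floating point.
    desired = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Evening peak: 4 pods at 90% average CPU against a 60% target.
    print(desired_replicas(4, 90, 60))  # 6
    # Quiet hours: 6 pods at 30% CPU.
    print(desired_replicas(6, 30, 60))  # 3
```

Adding nodes when the new pods no longer fit is a separate job, typically handled by a cluster autoscaler.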

Kubernetes Is Supported by Strong Open-Source Communities

Kubernetes is a community-led, fully open-source project. At one point, it was the fastest-growing open-source software ever, so there is a vast ecosystem of other open-source tools that work with it. Strong support for the platform means that Kubernetes will keep getting new features and improvements. This support protects investments in the platform and keeps people from being stuck with technology that will soon become obsolete. Kubernetes is also supported by, and portable across, IBM, AWS, Google Cloud, Microsoft Azure, and other public cloud providers.

People often think that Kubernetes competes directly with Docker, but that's not the case. Docker is a tool for packaging and running applications in containers. Kubernetes is a platform for orchestrating containers, such as those built with Docker, across a cluster of machines.

Kubernetes Minimizes Downtime through Self-Healing

Kubernetes comes with a built-in self-healing system that continuously monitors the state of containers and their ability to respond to client requests. Failed containers are restarted automatically, but some failures take longer to handle. By default, Kubernetes waits about five minutes before evicting pods from a node that has become unreachable.
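Restarts themselves are not instantaneous either. According to the Kubernetes documentation, the kubelet restarts a repeatedly failing container with an exponential back-off delay (10s, 20s, 40s, and so on), capped at five minutes. The delay resets once the container has run for 10 minutes without problems. This is the `CrashLoopBackOff` state.

The sketch below computes that default schedule. Newer releases may let administrators tune these values, so treat the numbers as defaults rather than constants.

```python
from typing import List


def restart_delays(failures: int, base_seconds: int = 10, cap_seconds: int = 300) -> List[int]:
    """Delay before each restart attempt under capped exponential back-off."""
    return [min(base_seconds * 2 ** attempt, cap_seconds) for attempt in range(failures)]


if __name__ == "__main__":
    delays = restart_delays(7)
    print(delays)       # [10, 20, 40, 80, 160, 300, 300]
    print(sum(delays))  # 910 seconds spent waiting across seven restarts
```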

Kubernetes Offers Deployment and Scaling Automation

Kubernetes is declarative, meaning it lets you describe the desired state of your deployments. This helps you optimize, simplify, and automate your deployment process.

Rolling updates let you update a Deployment without downtime. Being a declarative system, Kubernetes gradually changes the actual state to match the desired one. If an update goes wrong, you can use the Kubernetes rollback feature to return the Deployment to an earlier revision.
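During a rolling update, two Deployment settings bound how far the cluster may deviate from the desired replica count. Both default to 25%:

- `maxSurge`: extra pods allowed above the desired count, rounded up when given as a percentage.
- `maxUnavailable`: pods allowed to be unavailable, rounded down when given as a percentage.

The Python sketch below computes the resulting limits and covers only this arithmetic. If something goes wrong, `kubectl rollout undo deployment/<name>` rolls the Deployment back to its previous revision.

```python
from typing import Tuple, Union

Setting = Union[int, str]  # an absolute number or a percentage such as "25%"


def _to_count(setting: Setting, replicas: int, round_up: bool) -> int:
    if isinstance(setting, str) and setting.endswith("%"):
        scaled = replicas * int(setting[:-1])
        # Integer ceiling/floor division avoids floating-point rounding surprises.
        return -(-scaled // 100) if round_up else scaled // 100
    return int(setting)


def rolling_update_bounds(replicas: int, max_surge: Setting = "25%",
                          max_unavailable: Setting = "25%") -> Tuple[int, int]:
    """Return (max total pods, min available pods) during a rolling update."""
    surge = _to_count(max_surge, replicas, round_up=True)
    unavailable = _to_count(max_unavailable, replicas, round_up=False)
    return replicas + surge, replicas - unavailable


if __name__ == "__main__":
    print(rolling_update_bounds(10))  # (13, 8): 25% of 10 is 2.5, so surge 3, unavailable 2
    print(rolling_update_bounds(4))   # (5, 3)
```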

Kubernetes Helps to Scale Up and Down

Kubernetes is famous for autoscaling. Kubernetes helps enterprises scale up and down based on demand.

Cons of Kubernetes Containers

Steep Learning Curve

The most common systemic argument against using Kubernetes is that it is too hard to run. To begin with, the learning curve is very steep. In addition, the sheer amount of material covering different topics often leaves developers stumped about where to start learning Kubernetes.

Expensive Talent

For large corporations, Kubernetes-related costs can reach one million dollars or more per month. For big and medium-sized companies, they can range from thousands to hundreds of thousands of dollars each month. Even for startups, the monthly cost of running Kubernetes may reach $10,000.

Let’s say you choose to use vanilla Kubernetes, which is free, instead of managed services. Yet that doesn’t mean that using it is inexpensive. Because of the orchestrator’s complexity and challenging learning curve, you must recruit skilled Kubernetes engineers at a high cost or spend heavily on their training. In addition, the cost of cloud computing may vary and rise suddenly.

Cost-monitoring technologies may reduce Kubernetes expenditure by up to 40% for most enterprises. Developers love these capabilities because they speed up product launches and prevent performance issues. If you don’t monitor your Kubernetes cluster, it might waste resources and cause unanticipated cost increases.
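A basic version of what such tools measure is the gap between the CPU a pod requests and what it actually uses. Requested CPU is capacity the cluster reserves and you pay for. The Python sketch below ranks pods by idle requested CPU.

The pod names and millicore figures are hypothetical. In a real cluster, usage numbers would come from a metrics source such as `kubectl top pods`, which relies on metrics-server.

```python
from typing import List, Tuple

# (pod name, requested CPU in millicores, observed CPU usage in millicores), hypothetical
Sample = Tuple[str, int, int]


def idle_cpu_report(pods: List[Sample]) -> List[Tuple[str, int]]:
    """Return (pod, idle millicores) pairs, largest waste first."""
    idle = [(name, max(requested - used, 0)) for name, requested, used in pods]
    return sorted(idle, key=lambda item: item[1], reverse=True)


def idle_share(pods: List[Sample]) -> float:
    """Fraction of all requested CPU that sits unused."""
    requested = sum(r for _, r, _ in pods)
    unused = sum(max(r - u, 0) for _, r, u in pods)
    return unused / requested if requested else 0.0


samples = [("api", 1000, 250), ("worker", 2000, 1800), ("cache", 500, 100)]

if __name__ == "__main__":
    print(idle_cpu_report(samples))  # [('api', 750), ('cache', 400), ('worker', 200)]
    print(f"{idle_share(samples):.0%} of requested CPU is idle")
```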

Migrating Companies or Applications

Moving a company or enterprise to Kubernetes may take a lot of work, time, and resources. Even with help from experts, it would take time for team members to get used to the new workflow. On the bright side, they may already know about Kubernetes distributions and forks, which makes the change easier.

Expertise Needed from the Beginning

Problem-solving, bug-fixing, and integrating with new apps are all challenging stages of development. Debugging an application distributed across a Kubernetes cluster is more complex than debugging a conventional application. As a result, you need experts to help with training or problem-solving. Furthermore, it isn't easy to stay up to date, because the platform and its ecosystem keep expanding and changing.

Unnecessary Complexity for Small-Scale Apps

Developing apps that can scale up and down to accommodate varying traffic levels sounds fantastic. Yet putting this concept into practice may not be that straightforward for smaller applications. Many apps are designed from the start with a limited number of users or a limited budget in mind.

For such apps, the benefits of running on Kubernetes may not justify the work and resources needed to set it up. In that case, using Kubernetes might be an unnecessary waste of time and effort.


Does Kubernetes Make Sense for Your Business? What IntelliSoft Can Do

The question “Should you transition to Kubernetes?” defies a straightforward response since the answer to the question depends heavily on the specifics of the given scenario.

Kubernetes is a great place to start whether you want to launch a new project or create a scalable app. Kubernetes is a superb option if you want to design something that can scale in the future since it is very flexible, robust, scalable, and secure.

Adopting Kubernetes also gives the software team another task: creating and maintaining an application based on this technology. If this obligation seems bigger than the program itself, you might want to pause and analyze your situation. Likewise, Kubernetes may be overkill if you're building something that won't experience a significant user spike in the future.

Done right, Kubernetes offers a better developer experience, increased productivity, and an engaged staff. Done incorrectly, it leaves the DevOps team spending their days and nights administering the technology, which might be hellish.

In conclusion, we want to stress the importance of conducting timely research and gaining an awareness of the topic at hand to guarantee that you select the most appropriate tool for your company’s needs and its applications.

Go through our blog to get more in-depth articles about web development. If you’re looking for a time-tested solution to optimize the performance of your application, turn to IntelliSoft so that we can hire the best team of developers for you.

About Kosta Mitrofanskiy

I have 25 years of hands-on experience in the IT and software development industry. During this period, I helped 50+ companies to gain a technological edge across different industries. I can help you with dedicated teams, hiring stand-alone developers, developing a product design and MVP for your healthcare, logistics, or IoT projects. If you have questions concerning our cooperation or need an NDA to sign, contact info@intellisoftware.net.