What are Kubernetes clusters? They are like super-efficient managers for containerized apps, optimizing how those apps run across various environments, whether on physical servers, virtual machines, or in the cloud. By managing these containers, Kubernetes (K8s) makes sure your applications are not only agile but also robust and scalable, handling the ups and downs of demand with ease.

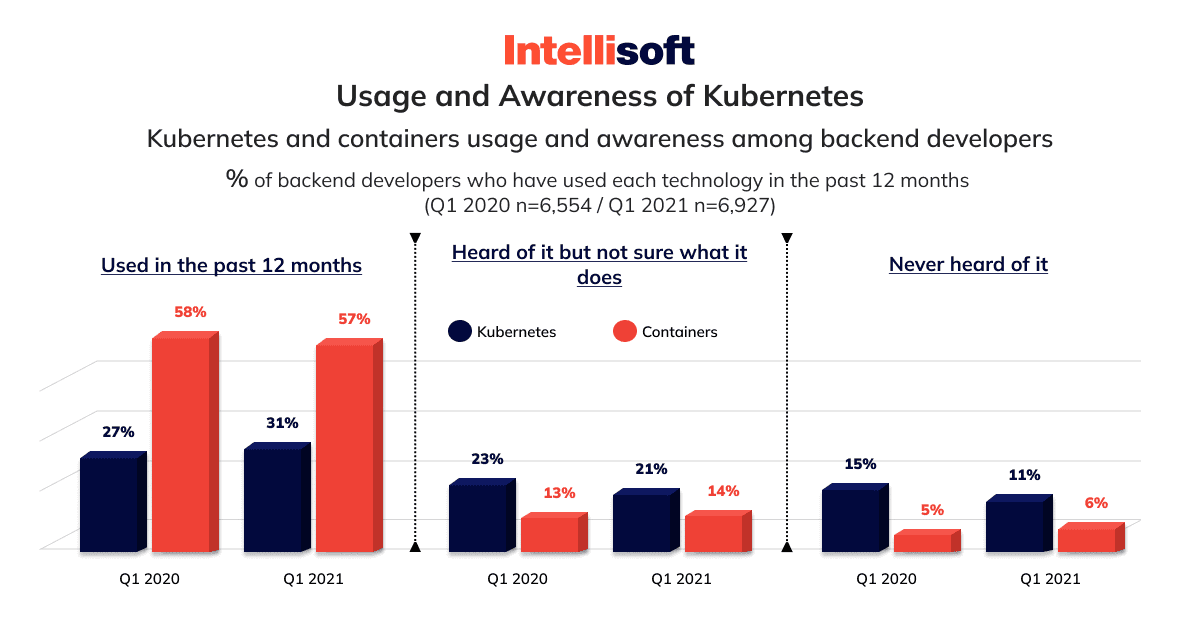

Kubernetes has grown rapidly in recent years, and it remains a hot topic in tech circles, especially among teams looking to refine their application delivery. According to Red Hat’s 2021 State of Open Source report, a whopping 85% of IT leaders say Kubernetes is essential to their cloud-native application strategies.

IntelliSoft’s team used Kubernetes in the ZyLab project because the client wanted the product to operate more efficiently. To achieve this goal, we are restructuring the relationships between services to make them more independent of one another.

The IntelliSoft team implemented a proof of concept (POC) for a containerized processing-node service and continues to containerize other parts of the product.

So, why not peel back the layers of this tech marvel? Let’s explore how clusters work and discover the tools that can help you secure and streamline your container management. Moreover, we’ll review some Kubernetes use cases.

What Is K8s? What Problem Does Kubernetes Solve?

What is Kubernetes? This technology has brought a small revolution to software development. Although Kubernetes 1.0 was released in 2015, its roots go back to Borg, a project that Google engineers started in 2003. Borg grew out of a practical problem: Google had to manage a massive hardware infrastructure and keep its services free to users without hurting business revenue. As Google attracted more talented engineers, the company re-evaluated how to get the most performance out of commodity hardware, and Borg was the result.

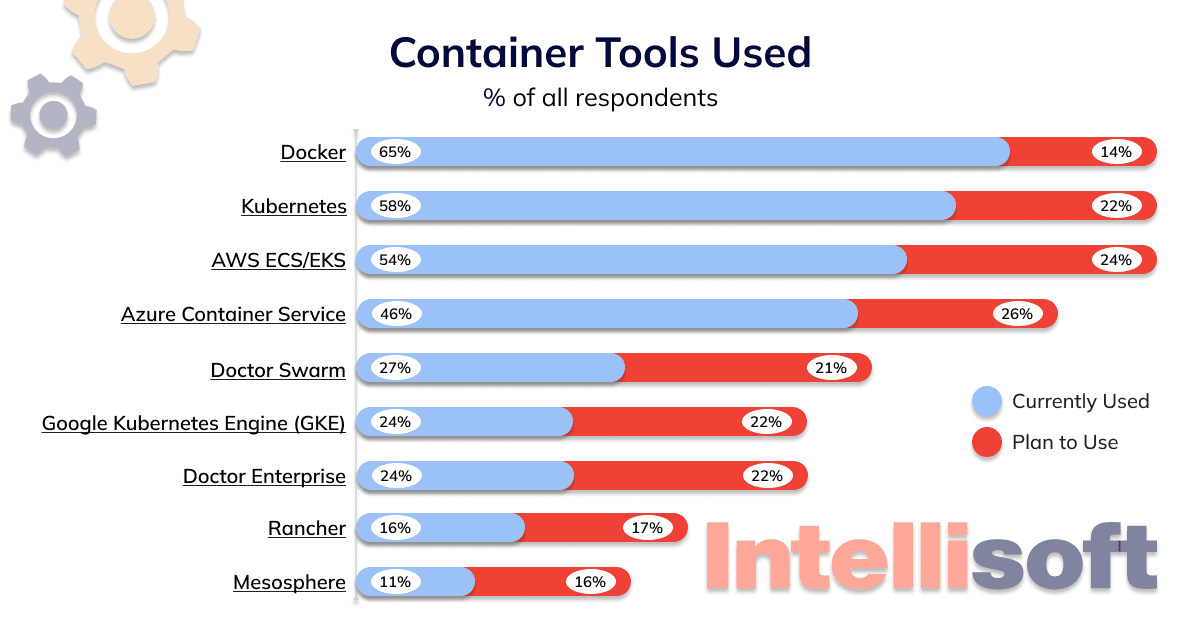

As the adoption of cloud-native technologies has grown exponentially, Kubernetes has become the de facto standard for container orchestration. Gartner predicted that the share of organizations running containerized applications in production, under 30% at the time, would surpass 75% by 2022. Kubernetes operates at the container level rather than the hardware level, handling scaling, failover, deployment patterns, and load balancing, and it lets you plug in your own logging and monitoring solutions. In this respect, it offers some features common to PaaS services, although it is not a traditional, all-inclusive PaaS.

In a variety of production scenarios, administrators can easily deploy, manage, and scale containerized programs using Kubernetes. It shields applications from the underlying host infrastructure, which lets loosely coupled components run as a single application spread across many containers. The tool manages every phase of a containerized app’s lifecycle. Google originally created it to run large containerized workloads in production. Although Google donated Kubernetes to the Cloud Native Computing Foundation (CNCF) in 2015, it is still actively working to advance the technology.

Kubernetes is written in the Go programming language and supports a wide range of cloud deployment strategies and platforms. It helps businesses manage IT workloads effectively by grouping containers into a cluster that runs on top of host operating systems, whether on physical or virtual machines. Kubernetes uses a master/worker architecture: a master node (today usually called the control plane) manages and controls the worker nodes that run container workloads, and this communication goes through the API server.

Understanding Kubernetes use cases and container orchestration is crucial before getting into the reasons to utilize Kubernetes.

What Is a Container Orchestration System?

Like an orchestra conductor coordinating the instruments in a performance, these systems arrange the parts of a project, coordinate how they relate to one another, distribute tasks among them, and control their execution. That is why they are called orchestration systems, or container orchestrators. Orchestrators are useful for managing microservice applications because each microservice in the cluster has its own data and model. A microservice is autonomous in terms of development and deployment and is therefore usually placed in a separate container. As the number of services grows, so does the number of containers, and they all need to be managed.

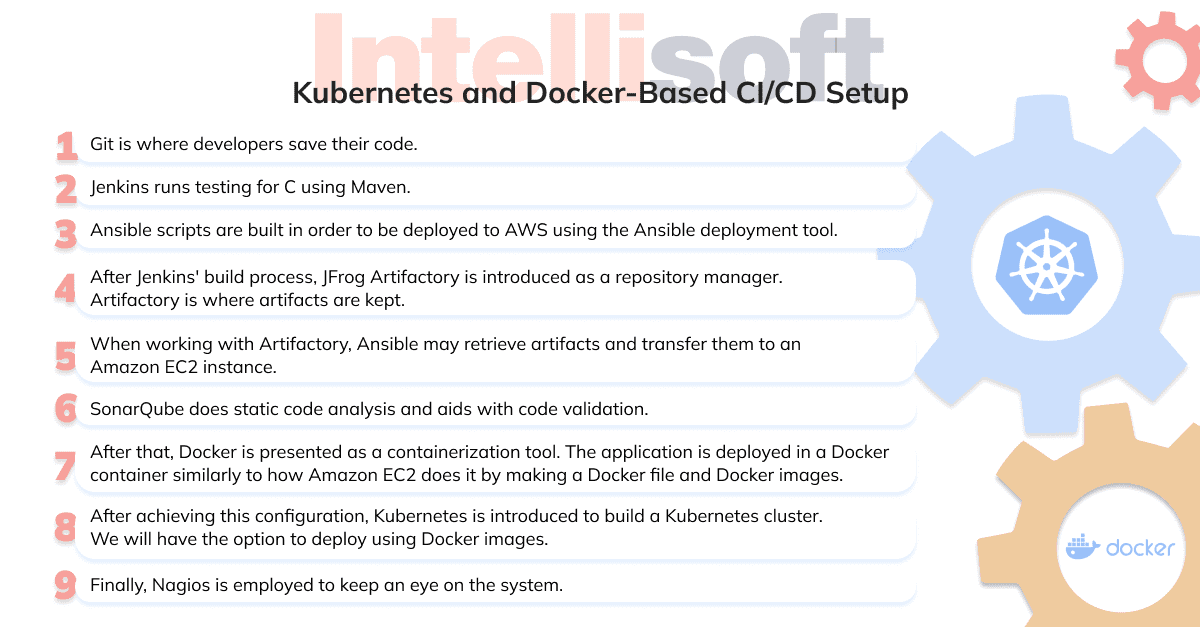

In short, orchestrators manage the lifecycles of a microservice application’s containers. Because everything in application development is interconnected, orchestration systems also help automate various production processes, which is why DevOps teams integrate them into continuous integration/continuous delivery (CI/CD) pipelines. Let’s look at the tasks that orchestrators such as Kubernetes take on.

Step 1. Infrastructure preparation and deployment

This is the installation of an application and its dependencies on a server, including preparing that server – installing libraries, services, and so on.

Step 2. Resource allocation

Deploying an application in a production cluster requires allocating server computing resources to its various processes, namely memory (RAM) and central processing unit (CPU) time.

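For each container, you set resource requests (what the scheduler reserves for the container) and limits (the maximum it may use), and you define what happens when a container reaches its limits. In Kubernetes, for example, a container that tries to use more CPU than its limit is throttled, while one that exceeds its memory limit can be terminated. This keeps the application up and running while keeping costs to a minimum.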
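The request/limit rules above can be sketched in Python. This is a minimal illustration, not Kubernetes code: the helper names (parse_cpu, parse_memory, validate_resources) are hypothetical, only a subset of the Kubernetes quantity notation is handled (CPU such as 250m for a quarter of a core, memory with Ki/Mi/Gi or k/M/G suffixes), and the only rule checked is that a request may not exceed its limit, which the Kubernetes API also enforces.

```python
# Minimal sketch: parse a small subset of Kubernetes resource quantities
# and flag any resource whose request exceeds its limit.

# Memory suffixes: binary (Ki, Mi, Gi) and decimal (k, M, G).
MEMORY_SUFFIXES = {
    "Ki": 1024, "Mi": 1024**2, "Gi": 1024**3,
    "k": 1000, "M": 1000**2, "G": 1000**3,
}


def parse_cpu(value: str) -> int:
    """Return CPU in millicores for values like '2', '0.5', or '250m'."""
    if value.endswith("m"):
        return int(value[:-1])
    return round(float(value) * 1000)


def parse_memory(value: str) -> int:
    """Return bytes for values like '128Mi', '1Gi', '500M', or '1024'."""
    # Try the two-letter binary suffixes before the one-letter decimal ones.
    for suffix in sorted(MEMORY_SUFFIXES, key=len, reverse=True):
        if value.endswith(suffix):
            return int(value[: -len(suffix)]) * MEMORY_SUFFIXES[suffix]
    return int(value)


def validate_resources(resources: dict) -> list:
    """Return one error message per resource whose request exceeds its limit."""
    parsers = {"cpu": parse_cpu, "memory": parse_memory}
    requests = resources.get("requests", {})
    limits = resources.get("limits", {})
    errors = []
    for name, parse in parsers.items():
        if name in requests and name in limits and parse(requests[name]) > parse(limits[name]):
            errors.append(f"{name}: request {requests[name]} exceeds limit {limits[name]}")
    return errors


# A container spec in the same shape as a Pod's resources field.
web_resources = {
    "requests": {"cpu": "250m", "memory": "64Mi"},
    "limits": {"cpu": "500m", "memory": "128Mi"},
}
print(validate_resources(web_resources))  # [] means the spec is consistent
```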

Step 3. Load balancing

The orchestrator automatically analyzes workloads and distributes them across servers and, within them, across individual containers. Balancing optimizes resource utilization, increases throughput, minimizes response time, and helps avoid overloading any single resource.
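As an illustration of the simplest balancing strategy, here is a round-robin sketch in Python. It is an assumption for demonstration purposes, not a description of how every Kubernetes component balances traffic: kube-proxy in iptables mode, for instance, picks backends at random, while IPVS mode supports round robin among other algorithms. The backend addresses are hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand each incoming request to the next backend in a fixed rotation."""

    def __init__(self, backends: list) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        self._rotation = cycle(backends)

    def pick(self) -> str:
        """Return the backend that should serve the next request."""
        return next(self._rotation)


# Hypothetical container endpoints behind one service.
balancer = RoundRobinBalancer(["10.0.0.11", "10.0.0.12", "10.0.0.13"])
assignments = [balancer.pick() for _ in range(6)]
print(assignments)  # each endpoint receives two of the six requests
```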

Step 4. Configuration and scheduling

This is part of server preparation: configuring the server with special tools. These tools are used not only for microservice applications but also for monolithic ones. Configuration management tools typically describe the desired state of a server (e.g., “make sure /etc/my.cnf contains this…” or “NGINX should work with the following configuration files”) and then bring the server into that state. Orchestrators include such configuration and scheduling capabilities, so you don’t need to install them separately.

Step 5. Scaling containers based on workloads

Idle resources lead to costs, and the lack of resources leads to unstable application performance. Workload-based scaling, which can be manual or automated, allows you to regulate the amount of resources used.

Step 6. Container status monitoring

This feature allows you to see which containers and their images are running and where, which commands are being used in containers, as well as which containers are consuming too many resources and why.

Automatic error log auditing allows you to troubleshoot problems in time.

Step 7. Ensuring secure communication between containers

Continuous assessment of clusters, nodes, and the container registry reveals misconfigurations and other vulnerabilities that may pose a threat, along with recommendations for addressing them.

Step 8. Traffic routing

For the application to be accessible from the Internet, it is necessary to configure the correct distribution of traffic from the external network to containers and the necessary services.

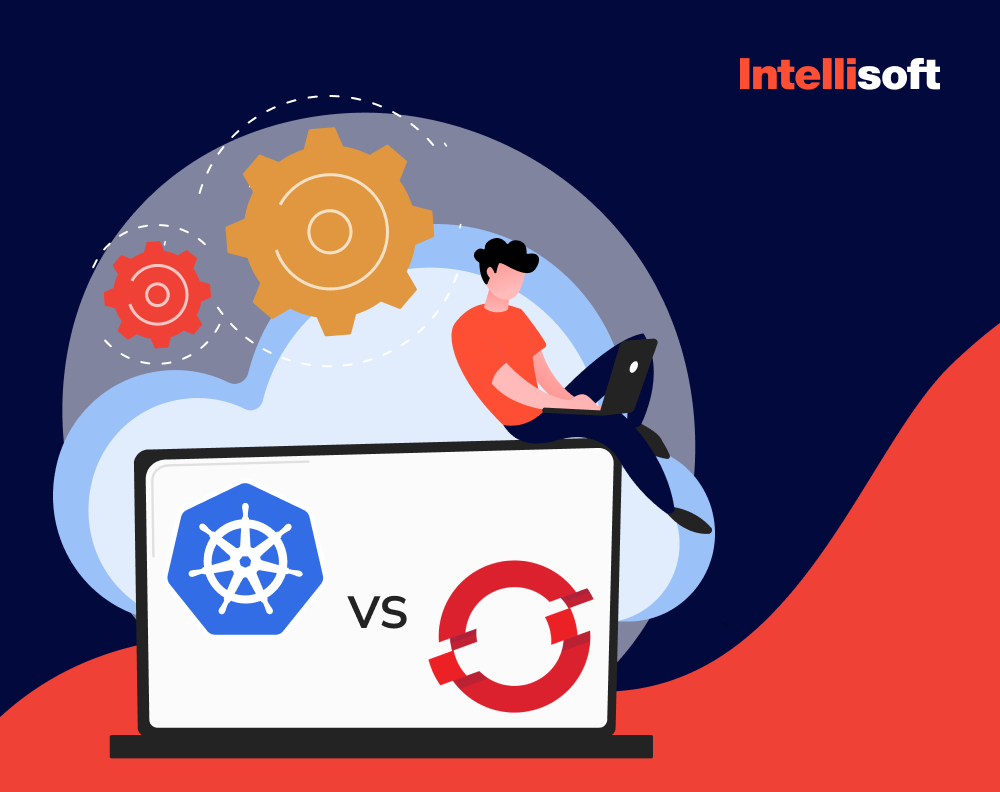

Kubernetes vs Docker: What Is the Difference?

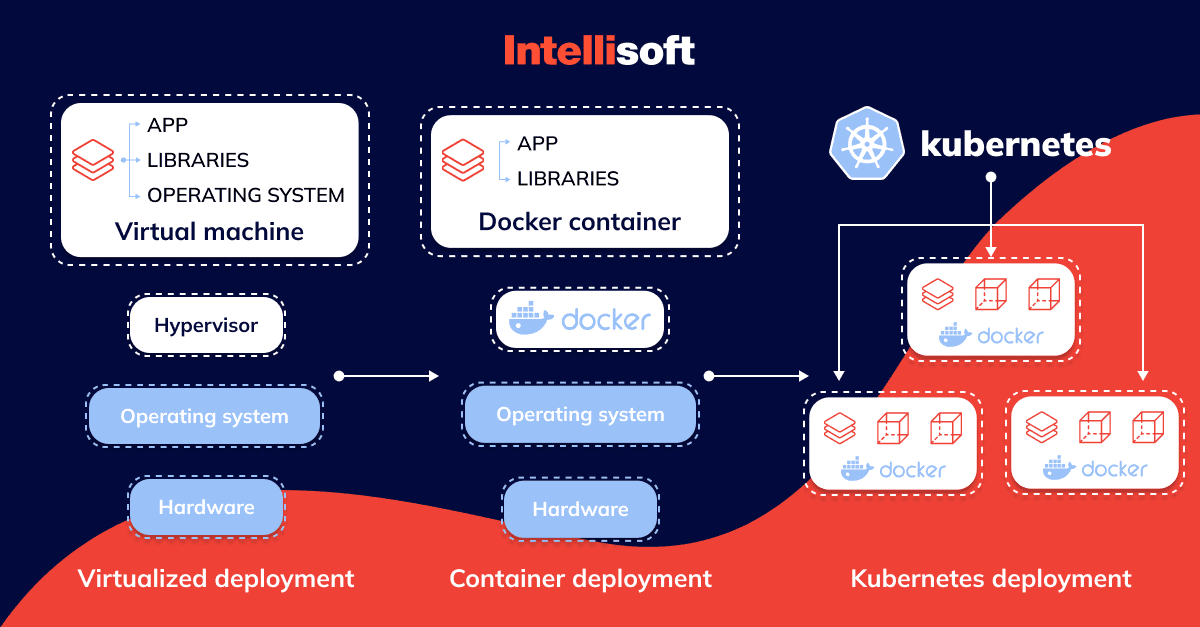

What are Docker and Kubernetes? First, let’s look at Docker. It is not an alternative to Kubernetes but an open platform for creating, deploying, and maintaining applications packaged in containers: self-sufficient units that can run in the cloud or in on-premises environments.

Docker is designed to create and run containers on any machine and then transfer their images to other environments through a container registry. Containerization makes it possible to run applications on an operating system (OS) while keeping each one in an isolated part of the system. Each application behaves as if it had its own dedicated OS, even though containers share the host’s kernel. As a result, multiple applications can run on a common operating system as if each had its own OS.

Each application lives in its own container. During development, Docker lets you create and manage applications and control their storage, memory, and permissions.

Docker features include:

- Fast, easy setup.

- High performance.

- Isolation by containerizing applications.

- Ability to manage security.

Docker and Kubernetes are not competitors: each serves a different purpose in DevOps, and they are frequently used together. With that in mind, consider the differences:

- Docker isolates your application in containers and is used to package and ship it. Kubernetes, by contrast, is a container orchestrator: its main job is to deploy and scale your application.

- Another significant distinction between Kubernetes and Docker is that the former was created to operate in a cluster, whereas the latter was created to operate on a single node.

- A further distinction is that Kubernetes needs a container runtime (such as containerd or CRI-O) to run containers, whereas Docker can be used on its own, without Kubernetes.

Kubernetes is currently the industry standard for managing and orchestrating containers. It provides an infrastructure layer for orchestrating containers at scale and for managing how users and developers interact with them. Similarly, Docker is currently the industry standard for developing and deploying containers.

Docker and Kubernetes: Working Together

Although Kubernetes and Docker are two distinct technologies, they complement each other and work well as a team. The fact that containers built with Docker can run on a Kubernetes cluster does not mean that Kubernetes is better than Docker. Combining the two gives you a well-integrated platform for deploying, managing, and orchestrating containers at any scale.

What Are the Benefits of Kubernetes?

One of the main benefits of Kubernetes is how convenient it is for application developers. The same containers that were developed and tested in the test environment are the ones that run in production.

In addition, with Kubernetes you don’t need to worry about how a new application will work with the host’s OS drivers, because the container packages the application together with everything it depends on. This means that everything the application needs to work correctly is present in the Kubernetes environment, which stores its data and keeps track of its state. Kubernetes allows developers to manage software releases at the click of a button. If any difficulties arise, you can go straight back to a previous version or roll out new versions incrementally while monitoring how the system behaves.

Kubernetes addresses most of the operational needs of application containers. Here are some of the main reasons why Kubernetes has gained such popularity:

- One of the largest open-source projects in the world.

- Built-in container health monitoring.

- Built-in auto-scaling.

- Huge community support.

- High availability at the cluster level.

- Streamlined container deployment.

- Efficient persistent storage.

- Support for multiple clouds (hybrid cloud).

- Compute resource management.

- Plenty of proven real-world use cases.

What are the additional advantages of Kubernetes? By making full use of immutable infrastructure and container technologies, it lets you scale apps on demand while drastically optimizing resource usage. DevOps teams like Kubernetes because it was designed with operations in mind. Developers choose it over other PaaS providers because it is less prescriptive, and its flexible service discovery and integration capabilities make it easy to package programs.

Features of Kubernetes

Many cloud providers offer a Kubernetes-based platform, or Kubernetes infrastructure as a service, on which you can run Kubernetes as a managed service. So what are the platform’s main features?

Service discovery and load balancing

Kubernetes can expose a container using a DNS name or its own IP address. If traffic to a container is high, Kubernetes can load balance and distribute the network traffic so that the deployment stays stable.

Automatic deployment and rollbacks

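You describe the desired state of your deployed containers, and Kubernetes changes the actual state to match it at a controlled rate. For example, it can create new containers for a deployment, remove existing ones, and roll back to an earlier version if something goes wrong.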
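To show what changing the actual state at a controlled rate means, here is a simplified Python sketch of a rolling update. It is hypothetical: a real Deployment also relies on readiness checks and on the maxSurge and maxUnavailable settings of its rolling-update strategy, whereas this sketch only replaces at most max_unavailable old pods per step.

```python
def rolling_update(pods: list, new_version: str, max_unavailable: int = 1) -> list:
    """Move every pod to new_version, replacing at most max_unavailable pods per step.

    Returns a snapshot of the pod versions after each step, so the controlled
    rate of change is visible.
    """
    if max_unavailable < 1:
        raise ValueError("max_unavailable must be at least 1")
    current = list(pods)
    history = [list(current)]
    while any(version != new_version for version in current):
        replaced = 0
        for i, version in enumerate(current):
            if version != new_version and replaced < max_unavailable:
                current[i] = new_version  # stop one old pod and start a new one in its place
                replaced += 1
        history.append(list(current))
    return history


steps = rolling_update(["v1", "v1", "v1"], "v2", max_unavailable=1)
for step in steps:
    print(step)

# A rollback is the same procedure aimed at the previous version.
rolled_back = rolling_update(steps[-1], "v1")
```

Because the previous version is just another desired state, rolling back reuses the same mechanism, as the last line shows.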

Self-healing

Kubernetes automatically restarts containers that fail. It also replaces containers when needed, kills containers that don’t pass a user-defined health check, and doesn’t route traffic to them until they are ready to serve.
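The restart logic can be sketched as a small state machine. In Kubernetes it is configured through liveness probes, whose failureThreshold (3 by default) sets how many consecutive failed checks trigger a restart. The ContainerHealth class and record_probe function below are hypothetical stand-ins for what the kubelet tracks, and readiness probes (which control whether traffic reaches a container) are not modeled.

```python
from dataclasses import dataclass


@dataclass
class ContainerHealth:
    """Hypothetical probe bookkeeping for one container."""
    name: str
    consecutive_failures: int = 0
    restart_count: int = 0


def record_probe(c: ContainerHealth, healthy: bool, failure_threshold: int = 3) -> bool:
    """Record one liveness-probe result; return True if the container gets restarted."""
    if healthy:
        c.consecutive_failures = 0  # any success resets the failure streak
        return False
    c.consecutive_failures += 1
    if c.consecutive_failures >= failure_threshold:
        c.restart_count += 1        # stands in for killing and restarting the container
        c.consecutive_failures = 0
        return True
    return False


web = ContainerHealth("web")
results = [record_probe(web, ok) for ok in [True, False, False, True, False, False, False]]
print(results, web.restart_count)
```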

Storage orchestration

Kubernetes can automatically mount the storage system of your choice, such as local storage, a public cloud provider’s storage, and more.

Automatic bin packing

You provide Kubernetes with a cluster of nodes and tell it how much CPU and memory each container needs. Kubernetes then places containers on the nodes so that resources are used as efficiently as possible.
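A simplified Python sketch of this placement step follows. It mirrors the general filter-then-score approach of the Kubernetes scheduler but is not its implementation: nodes without enough unrequested CPU and memory are filtered out, and the remaining nodes are scored by how much capacity they would have left, which resembles a least-allocated strategy. The node names and sizes are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_capacity: int        # allocatable CPU in millicores
    mem_capacity: int        # allocatable memory in MiB
    cpu_requested: int = 0   # sum of requests of pods already placed here
    mem_requested: int = 0


def schedule(nodes: List[Node], cpu: int, mem: int) -> Optional[str]:
    """Place a pod with the given requests and return the chosen node's name (or None)."""
    # Filter: keep only nodes with enough unrequested CPU and memory.
    feasible = [
        n for n in nodes
        if n.cpu_capacity - n.cpu_requested >= cpu
        and n.mem_capacity - n.mem_requested >= mem
    ]
    if not feasible:
        return None  # the pod would stay Pending

    # Score: prefer the node with the largest free fraction left after placement.
    def score(n: Node) -> float:
        cpu_free = (n.cpu_capacity - n.cpu_requested - cpu) / n.cpu_capacity
        mem_free = (n.mem_capacity - n.mem_requested - mem) / n.mem_capacity
        return (cpu_free + mem_free) / 2

    best = max(feasible, key=score)
    best.cpu_requested += cpu
    best.mem_requested += mem
    return best.name


cluster = [Node("node-a", 2000, 4096), Node("node-b", 4000, 8192)]
print(schedule(cluster, cpu=500, mem=1024))   # node-b has more room left afterwards
print(schedule(cluster, cpu=5000, mem=1024))  # no node can fit it
```

Replacing max with min in the scoring step would favor the fullest feasible node and pack pods more tightly.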

Managing sensitive info and configuration

Kubernetes can store and manage sensitive data such as passwords, tokens, and keys. You can deploy and update secrets and application configuration as needed without rebuilding container images and without exposing sensitive data in your configuration.
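For illustration, here is a Python sketch that builds a Secret manifest as a dictionary; kubectl accepts manifests in JSON as well as YAML. The Secret name, namespace, and credentials are hypothetical. Note that the values under data are only base64-encoded, not encrypted, so access to Secrets should still be restricted (for example, with RBAC) and encryption at rest enabled where possible.

```python
import base64
import json


def make_secret(name: str, namespace: str, values: dict) -> dict:
    """Build an Opaque Secret manifest; the API expects base64-encoded values in data."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": {
            key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in values.items()
        },
    }


# Hypothetical credentials for illustration only.
secret = make_secret("db-credentials", "default", {"username": "app", "password": "s3cr3t"})
print(json.dumps(secret, indent=2))
# base64 is an encoding, not encryption: anyone who can read the Secret can decode it.
```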

And the list goes on and on. Now, it is time to look at the architecture of Kubernetes.

What Does Kubernetes Consist of?

Here are some of the primary components of Kubernetes:

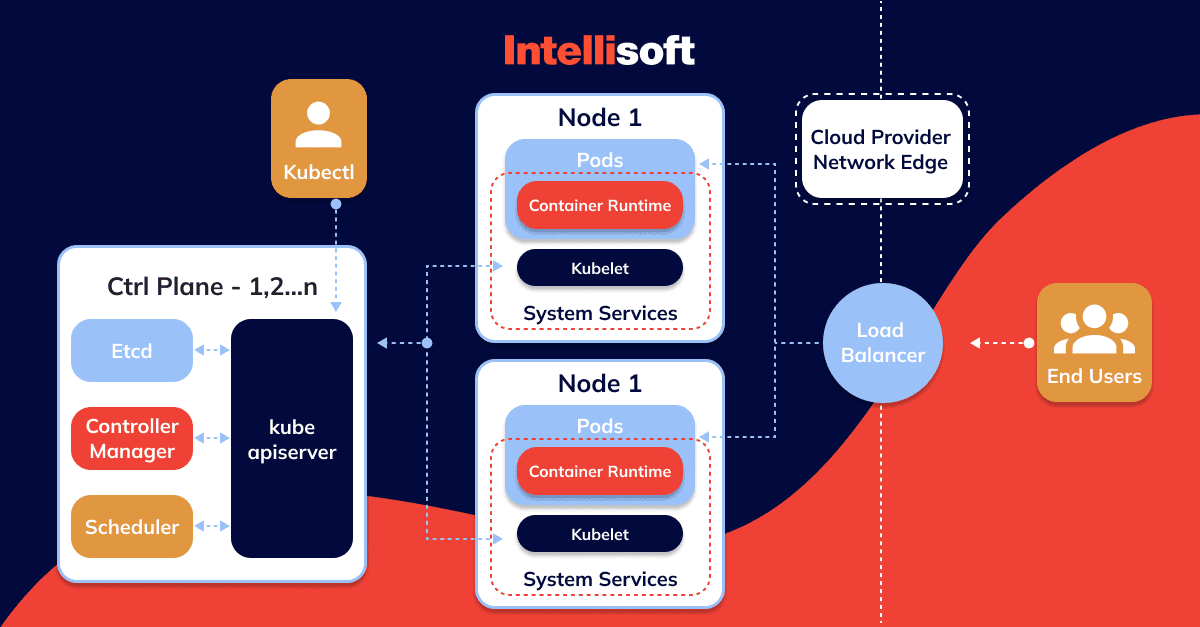

- Kubernetes master node

The Kubernetes master node (today usually called the control plane) is the access point from which administrators and other users manage the cluster, including scheduling and deploying containers. A cluster always has at least one master node; high-availability setups replicate it across several nodes.

The master node stores state and configuration information for the entire cluster in etcd, a persistent, distributed key/value store. Nodes learn how to keep their containers’ configurations up to date through the API server, which reads and writes this data in etcd. etcd can run on the master nodes themselves or as a standalone cluster.

- Nodes

Every node in a Kubernetes cluster must have a container runtime installed. Docker Engine was long the typical choice; today, containerd and CRI-O are common. After Kubernetes deploys containers to cluster nodes, the container runtime runs and manages them. The containers house the running instances of your applications (web services, databases, API servers, etc.).

An agent process called the kubelet runs on each Kubernetes node. It controls the node’s state (starting, stopping, and maintaining application containers) by carrying out instructions from the control plane. The kubelet collects performance and operational state information from the node and from the pods and containers under its control and passes it to the control plane, which uses it to allocate work.

- Labels

In Kubernetes, you can attach labels (key/value pairs) to pods and other objects. Cluster operators use labels to organize objects and select subsets of them. For instance, labels make it easier to find the information you need when monitoring Kubernetes objects.
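Label selection can be sketched in a few lines of Python. This models only equality-based selectors, where every key=value pair must match; Kubernetes also supports set-based selectors such as in and notin. The pod names and labels are hypothetical.

```python
def matches(selector: dict, labels: dict) -> bool:
    """Equality-based selection: every key=value pair in the selector must be on the object."""
    return all(labels.get(key) == value for key, value in selector.items())


# Hypothetical pods and their labels.
pods = {
    "web-1": {"app": "web", "tier": "frontend", "env": "prod"},
    "web-2": {"app": "web", "tier": "frontend", "env": "staging"},
    "db-1": {"app": "db", "tier": "backend", "env": "prod"},
}

# Roughly what kubectl get pods -l tier=frontend,env=prod selects.
selected = sorted(name for name, labels in pods.items()
                  if matches({"tier": "frontend", "env": "prod"}, labels))
print(selected)  # ['web-1']
```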

- Namespaces

Namespaces are designed for multi-user setups in which users are spread across many teams or projects, and they let you build virtual clusters on top of one physical cluster. They also logically separate cluster resources, and you can assign resource quotas per namespace.
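A per-namespace quota check can be sketched like this. The resource keys (requests.cpu, pods) follow the naming used by Kubernetes ResourceQuota objects, but the QuotaTracker class is a hypothetical simplification: CPU is given in millicores as plain integers, and only the admission of new pods is modeled.

```python
from collections import defaultdict


class QuotaTracker:
    """Simplified per-namespace admission check in the spirit of a ResourceQuota."""

    def __init__(self) -> None:
        self.hard = {}                                     # namespace -> {resource: hard limit}
        self.used = defaultdict(lambda: defaultdict(int))  # namespace -> {resource: usage}

    def set_quota(self, namespace: str, hard: dict) -> None:
        self.hard[namespace] = dict(hard)

    def create_pod(self, namespace: str, requests: dict) -> bool:
        """Admit the pod only if no quota-limited resource would exceed its hard limit."""
        wanted = dict(requests)
        wanted["pods"] = wanted.get("pods", 0) + 1         # every pod counts against "pods"
        hard = self.hard.get(namespace, {})
        used = self.used[namespace]
        if any(used[res] + wanted.get(res, 0) > limit for res, limit in hard.items()):
            return False                                   # rejected; usage is unchanged
        for res, amount in wanted.items():
            used[res] += amount
        return True


quotas = QuotaTracker()
quotas.set_quota("team-a", {"requests.cpu": 1000, "pods": 2})  # CPU in millicores
print(quotas.create_pod("team-a", {"requests.cpu": 600}))     # True
print(quotas.create_pod("team-a", {"requests.cpu": 600}))     # False: 1200m > 1000m
```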

- Helm

Helm is a package manager for Kubernetes and a project of the Cloud Native Computing Foundation. Helm charts are preconfigured packages of Kubernetes application resources that users can download and deploy in their environments. With ready-made charts that they can share, customize, and deploy to their development and production environments, DevOps teams can manage applications on Kubernetes faster.

- Cluster-level logging

To collect and store the application and system logs that containers in the cluster write to standard output and standard error, you can integrate your logging tool with Kubernetes. Keep in mind that Kubernetes does not provide a native storage solution for log data, so you must choose your own logging solution if you want cluster-level logs.

- Domain name system (DNS)

Kubernetes provides a mechanism for discovering services and pods by name using DNS. This cluster DNS server works in addition to any other DNS servers in your infrastructure.
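Service names follow a predictable pattern, sketched below in Python. The sketch assumes the default cluster domain cluster.local (clusters can be configured with a different one) and a pod resolver whose search list starts with the pod’s own namespace; it ignores details such as the ndots option and absolute names. The service and namespace names are hypothetical.

```python
def service_fqdn(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Fully qualified name that cluster DNS serves for a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def search_candidates(name: str, pod_namespace: str, cluster_domain: str = "cluster.local") -> list:
    """Names a pod's resolver tries for a short name, following the usual search list."""
    search = [f"{pod_namespace}.svc.{cluster_domain}", f"svc.{cluster_domain}", cluster_domain]
    return [f"{name}.{suffix}" for suffix in search]


print(service_fqdn("payments", "shop"))           # payments.shop.svc.cluster.local
print(search_candidates("payments.shop", "web"))  # the second entry is the Service's FQDN
```

This is why a pod in the same namespace can reach a Service by its short name, while a pod elsewhere can use service.namespace.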

What Are Pods in Kubernetes?

In Kubernetes, a Pod is the smallest deployable unit of computing that can be created and managed. A Pod represents a single instance of a running process in a cluster. It encapsulates one or more containers (such as Docker containers), storage resources, a unique network IP, and options that govern how the containers should run.

Here are some key points about Pods in Kubernetes:

- Containers in a Pod: A Pod can contain one or more containers that share the same IP address, IPC namespace, and other resources. This grouping of co-located containers is convenient for managing applications that require tightly coupled components.

- Ephemeral: Pods are designed to be ephemeral and disposable. They do not survive if the node they run on fails. Instead, new Pods are created to handle workloads as needed.

- Pod Scheduling: Kubernetes schedules Pods to run on specific nodes based on resource requirements and constraints defined by the user.

- Pod Networking: Each Pod gets its own unique IP address within the cluster. Containers within the Pod communicate with each other using localhost, and with containers in other Pods using those Pods’ IP addresses.

- Pod Volumes: Pods can include volumes that allow data to persist beyond the lifecycle of a single container, enabling data sharing among containers within the Pod.

- Pod Lifecycle: Pods go through different phases in their lifecycle, including Pending, Running, Succeeded, Failed, and Unknown.

- Controllers: Pods are typically managed by higher-level controllers like Deployments, ReplicaSets, StatefulSets, etc., which ensure the desired number of Pod replicas are running at any given time.
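The controller behavior described in the last bullet can be sketched as a reconciliation function. This is a hypothetical simplification of what a ReplicaSet controller does: it compares the desired replica count with the pods that have not Succeeded or Failed, then deletes or creates pods to close the gap. The real controller works through the API server and chooses which pods to delete more carefully.

```python
FINISHED_PHASES = ("Succeeded", "Failed")


def reconcile(desired: int, pods: dict) -> dict:
    """Return a pod map (name -> phase) with exactly `desired` active pods.

    Pods that have Succeeded or Failed no longer count toward the desired
    number, so they are dropped and replaced.
    """
    live = {name: phase for name, phase in pods.items() if phase not in FINISHED_PHASES}
    # Too many active pods: delete the surplus (this sketch simply drops the last names).
    for name in sorted(live)[desired:]:
        del live[name]
    # Too few: create replacements, which start in the Pending phase.
    while len(live) < desired:
        i = 1
        while f"pod-{i}" in pods or f"pod-{i}" in live:
            i += 1  # pick a name not used by any current or previous pod
        live[f"pod-{i}"] = "Pending"
    return live


state = reconcile(3, {"pod-1": "Running", "pod-2": "Failed"})
print(state)  # {'pod-1': 'Running', 'pod-3': 'Pending', 'pod-4': 'Pending'}
```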

Kubernetes Use Cases

In the section below, you’ll find the most common examples of when to use Kubernetes:

Role-Based Access Control

Role-based access control (RBAC) enables fine-grained access control, limiting access to authorized users only. You can also configure permissions at the cluster level, for example, to give every new user added to your cluster read-only access.

A strong security solution for Kubernetes clusters is RBAC. You can manage which users can access which resources and what they can do with them. This lessens the possibility of someone abusing your system or accidentally corrupting a crucial application or data set by making changes without permission, ensuring that people only have access when needed.
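The RBAC model can be sketched in Python. The rule structure (API groups, resources, verbs) follows Kubernetes Role objects, and, as in Kubernetes, permissions are purely additive with no deny rules. The role names, users, and the authorize function are hypothetical, and role bindings are simplified to a user-to-roles map.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PolicyRule:
    """One rule of a Role: which verbs are allowed on which resources in which API groups."""
    api_groups: List[str]
    resources: List[str]
    verbs: List[str]

    def allows(self, api_group: str, resource: str, verb: str) -> bool:
        def hit(values: List[str], wanted: str) -> bool:
            return "*" in values or wanted in values
        return hit(self.api_groups, api_group) and hit(self.resources, resource) and hit(self.verbs, verb)


# Hypothetical roles and bindings; "" is the core API group that holds pods.
ROLES = {
    "pod-reader": [PolicyRule([""], ["pods"], ["get", "list", "watch"])],
    "deployer": [PolicyRule(["apps"], ["deployments"], ["get", "list", "create", "update", "patch"])],
}
BINDINGS = {"alice": ["pod-reader"], "bob": ["pod-reader", "deployer"]}


def authorize(user: str, verb: str, resource: str, api_group: str = "") -> bool:
    """RBAC is additive: allow if any rule in any bound role matches; there are no deny rules."""
    return any(
        rule.allows(api_group, resource, verb)
        for role in BINDINGS.get(user, [])
        for rule in ROLES[role]
    )


print(authorize("alice", "list", "pods"))    # True
print(authorize("alice", "delete", "pods"))  # False
```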

Development of Cloud-Native Apps

For those who want to use Kubernetes as a platform rather than just a container orchestrator, Kubernetes is a fantastic tool for building cloud-native applications. Deploying and scaling apps on Kubernetes is simple, and it offers richer capabilities for both than simpler orchestrators such as Docker Swarm.

Because Kubernetes makes it quick to create production-ready containers, developers were expected to use it more often in 2022-2023 as their main platform, or even as the sole foundation of their CI/CD workflows.

Serverless Architecture

Serverless architecture is quickly gaining popularity because it lets companies write code without worrying about provisioning infrastructure. In this style of design, the cloud provider provisions resources only when a service is active. However, vendor lock-in greatly hampers the serverless idea, because code written for one cloud provider’s serverless platform often has problems running on another. By abstracting the underlying infrastructure, Kubernetes makes it possible to build a vendor-neutral serverless platform. Kubeless is one example of such a serverless framework.

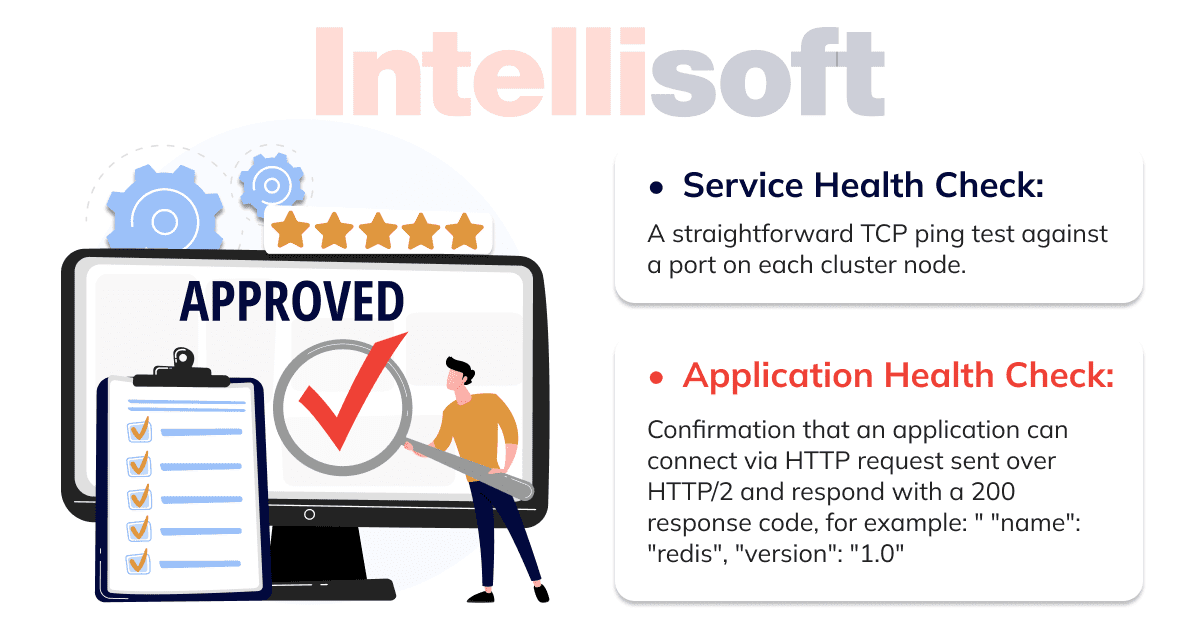

Updating Deployment Images and Monitoring Service Health

The ease with which applications can be developed and deployed is one of the reasons Kubernetes has become so popular. However, if you run many apps on a single cluster, you may run into several issues at once. Any successful DevOps process should therefore include regular monitoring of your services to make sure they are healthy. Checking their health and setting up alerts for when something goes wrong can help.
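Updating a deployment’s image comes down to changing its pod template, which triggers a rolling update. The Python sketch below builds the patch body for that change; the container name and image reference are hypothetical. The printed JSON could be passed to kubectl patch deployment web -p, and kubectl set image deployment/web web=registry.example.com/web:1.4.2 makes the same change in one command.

```python
import json


def image_update_patch(container: str, image: str) -> dict:
    """Strategic-merge-patch body that changes one container's image in a Deployment.

    In a strategic merge patch, the containers list is merged by each entry's
    "name", so only the named container's image changes.
    """
    return {"spec": {"template": {"spec": {"containers": [{"name": container, "image": image}]}}}}


# Hypothetical container name and image reference.
patch = image_update_patch("web", "registry.example.com/web:1.4.2")
print(json.dumps(patch))
```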

Network Policy Administration

The process of setting up network access policies for applications operating on the cluster is known as network policy management. Network policies are set up as a collection of guidelines that specify which pods are allowed to communicate with one another, external services, etc.

Network policy management is essential to securing Kubernetes because it ensures that only the intended traffic can reach sensitive resources, such as databases and services, including traffic from outside the cluster. This guards against malicious actors who might try to reach your services through unintentionally open listening ports or backdoors.
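How these guidelines combine can be sketched in Python. The policy dictionaries follow the shape of a Kubernetes NetworkPolicy spec, but the evaluator is a hypothetical simplification: it handles only ingress rules with podSelector peers within a single namespace and ignores ports, namespaceSelector, and ipBlock. In a real cluster, policies are enforced by the network plugin, so they only take effect if that plugin supports NetworkPolicy.

```python
def matches(selector: dict, labels: dict) -> bool:
    """Equality-based label match; an empty selector matches every pod."""
    return all(labels.get(k) == v for k, v in selector.get("matchLabels", {}).items())


def rule_allows(rule: dict, src_labels: dict) -> bool:
    peers = rule.get("from")
    if not peers:
        return True  # an ingress rule without "from" admits traffic from any source
    # This sketch only understands podSelector peers (no namespaceSelector or ipBlock).
    return any("podSelector" in peer and matches(peer["podSelector"], src_labels) for peer in peers)


def ingress_allowed(policies: list, dst_labels: dict, src_labels: dict) -> bool:
    """Decide whether a pod with src_labels may connect to a pod with dst_labels."""
    selecting = [p for p in policies if matches(p.get("podSelector", {}), dst_labels)]
    if not selecting:
        return True  # pods that no policy selects are non-isolated and accept all traffic
    # Policies are additive: any rule of any selecting policy is enough to allow.
    return any(rule_allows(rule, src_labels) for p in selecting for rule in p.get("ingress", []))


# Hypothetical policy: only pods labeled app=api may reach pods labeled app=db.
db_policy = {
    "podSelector": {"matchLabels": {"app": "db"}},
    "ingress": [{"from": [{"podSelector": {"matchLabels": {"app": "api"}}}]}],
}
print(ingress_allowed([db_policy], {"app": "db"}, {"app": "api"}))  # True
print(ingress_allowed([db_policy], {"app": "db"}, {"app": "web"}))  # False
```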

Deploying Containerized Applications

containerd, a widely used container runtime for Kubernetes, makes it simple to deploy and manage the containerized applications that run on Kubernetes. Originally developed by Docker and later donated to the CNCF, this open-source runtime is used with Kubernetes and other cloud computing platforms. You can also use it as a standalone tool to run containers locally or on your own servers.

Observation, Logging, and Metrics Collection

Collecting metrics, logging, and monitoring are crucial for Kubernetes. They help developers understand the state of their cluster and resolve problems as they arise. As a result, monitoring is one of the most common Kubernetes use cases.

Examples of monitoring solutions include Prometheus (open source), Stackdriver (from Google, now part of Google Cloud’s operations suite), Papertrail (commercial), Datadog (paid), and the Heapster/InfluxDB/Grafana combination (once common, although Heapster has since been retired in favor of metrics-server). For log collection, you can also use Fluentd, Logstash, or the ELK stack.
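Tools such as Prometheus scrape metrics that applications expose over HTTP in a plain-text exposition format. The Python sketch below renders that format by hand to show what a scrape target returns; the metric name and values are hypothetical, and in practice you would use an official client library such as prometheus_client instead.

```python
def render_metric(name: str, help_text: str, metric_type: str, samples: list) -> str:
    """Render one metric family in the Prometheus text exposition format."""
    def escape(value: str) -> str:
        # Label values must escape backslashes, double quotes, and newlines.
        return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")

    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} {metric_type}"]
    for labels, value in samples:
        label_str = ",".join(f'{k}="{escape(v)}"' for k, v in sorted(labels.items()))
        lines.append(f"{name}{{{label_str}}} {value}" if label_str else f"{name} {value}")
    return "\n".join(lines) + "\n"


# Hypothetical request counter for a web service.
text = render_metric(
    "http_requests_total",
    "Total HTTP requests handled.",
    "counter",
    [({"method": "GET", "code": "200"}, 1027), ({"method": "POST", "code": "500"}, 3)],
)
print(text, end="")
```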

Scaling of Horizontal Pods

Horizontal pod scaling is the process of changing the number of pod replicas that run a workload. It is used to adjust the capacity of an existing application by adding more replicas when demand rises (or removing them when it drops).

Without horizontal scaling, you would have to manage pod replica counts manually. The Horizontal Pod Autoscaler instead adjusts the replica count of a workload resource, such as a Deployment, based on observed metrics like CPU utilization. Because you set minimum and maximum replica counts, you can manage your workloads without worrying that they will grow or shrink too much.
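The Kubernetes documentation describes the core Horizontal Pod Autoscaler calculation as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), with no scaling when the ratio is within a tolerance (0.1 by default). The Python sketch below applies that formula; the min_replicas and max_replicas defaults are hypothetical, and the real controller adds further behavior such as stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Replica count from the HPA formula ceil(current * currentMetric / targetMetric)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: don't scale
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))  # respect the min/max bounds


# 4 pods averaging 90% CPU against a 50% target: 4 * 1.8 = 7.2, rounded up to 8.
print(desired_replicas(4, current_metric=90, target_metric=50))
```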

Management of Secrets & Configuration

You’ve probably heard that Kubernetes works with configuration management tools like Ansible and Puppet. In fact, it integrates with a number of tools, including Helm, HashiCorp Vault (for managing secrets), SaltStack, and Terraform. If you already use one of them, you can most likely keep using it with Kubernetes.

Besides HashiCorp Vault, Kubernetes supports a variety of other secret management tools, including Azure Key Vault and AWS Secrets Manager. If you need these products but don’t want to give up the provisioning flexibility Kubernetes provides for your cloud-native applications or services, or you simply need the quickest way to spin up an application on any cloud provider without worrying about secrets, you should seriously consider using Cloud Native Storage.

Kubernetes Trends to Watch in 2023

Kubernetes is widely used in container orchestration, and its appeal is still strong. However, this does not imply that development in the field of container orchestration has come to a complete halt. We suggest that you look at the recent Kubernetes trends.

AI/ML and Kubernetes Become a Powerful Couple

Perhaps the most notable examples of Kubernetes’ maturity and its capacity to handle increasingly complex use cases are artificial intelligence (AI) and machine learning (ML). AI/ML workloads are increasingly being served in production on Kubernetes, and in the coming years this trend will significantly affect business.

AI and ML are affecting nearly every element of contemporary business, from improving customer interactions to making better data-driven decisions and modeling driverless vehicles.

Cloud Migration Is Accelerating

Cloud migration is not a new idea. In fact, 94% of businesses already use hybrid, multi-cloud, public, or private cloud services. Organizations also use containers to speed up software delivery and make cloud migration more flexible.

As remote work grows, the move to the cloud is accelerating, and this tendency will probably continue. Gartner anticipated that by 2022, more than $1.3 trillion in enterprise IT spending would be affected by the shift to the cloud.

As cloud adoption grows, we can expect hyperscalers like Amazon, Microsoft, and Google to keep releasing new tools that make the transition to container-native environments easier.

The Shift to DevSecOps

The shift to containers and Kubernetes brings significant security concerns. Companies understand that they cannot adequately secure containerized environments unless security is built into every phase of the development lifecycle. As a result, DevSecOps practices are becoming an integral part of modern containerized environments.

Here are some important conclusions from the most recent Red Hat State of Kubernetes Security study that support this trend:

- In the past 12 months, 94% of DevOps teams reported experiencing a security incident in a Kubernetes cluster.

- Due to security concerns, 55% of teams had to postpone Kubernetes production installations.

- Over the past 12 months, 60% of teams reported experiencing a misconfiguration issue.

- 15% of teams believe that developers are largely in charge of maintaining Kubernetes security.

Kubernetes Networking Trends Will Be Driven by IoT and Edge Computing

Edge computing is changing how device data is gathered and managed. Like most organizations, you may have decided to use Kubernetes as your go-to platform for future IoT and edge applications.

However, the networking side is still a work in progress. Service mesh applications require service mesh tools, but these are not portable, fast, or secure enough for most edge computing scenarios. Lightweight Kubernetes distributions such as K3s and MicroK8s were created with IoT and edge in mind, but networking, in general, has yet to gain comparable lightweight variants.

Stateful Applications

Today, stateful applications are the new norm. While innovations like containers and microservices have simplified the development of cloud-based systems, their dynamic nature has made managing stateful processes more difficult.

Stateful apps must be executed in containers more and more frequently. Applications that are containerized can be deployed and operated more easily in a variety of complicated contexts, including edge, public cloud, and hybrid cloud. For continuous integration and delivery (CI/CD) to provide a seamless transition from development to production, maintaining state is equally crucial.

Kubernetes Talent

Is there anything more popular than Kubernetes itself? The answer is “Kubernetes skills.”

No one really wants to hear about a “talent shortage,” but for the foreseeable future, there will be burning demand for cloud-native skills in general and Kubernetes-specific skills in particular. In 2022, demand is once again exceeding supply.

According to Clyde Seepersad, SVP and GM of training and certification at The Linux Foundation, the adoption of Kubernetes, and of cloud-native technologies in general, “is not showing any signs of slowing down.” He expects more businesses to keep migrating to the cloud and to make greater use of serverless, microservices, and other cloud-native technologies. Above all, Mr. Seepersad anticipates that Kubernetes, Linux, and DevOps will keep playing a central role in organizations.

Yes, in the coming year, we’ll hear more complaints about a lack of accessible, reasonably priced Kubernetes talent. Additionally, there will be more focused, innovative efforts made to develop Kubernetes expertise and related skills.

Security Will Remain a Top Priority

Kubernetes has important built-in security features, provided you configure the appropriate settings correctly. Its thriving ecosystem has also paid a lot of attention to security, as evidenced by the fact that OperatorHub.io currently hosts 29 different security apps.

In 2023, businesses will hone their cloud and cloud-native security strategies using the tools and services at their disposal. Red Hat’s Kirsten Newcomer, director of cloud and DevSecOps strategy, predicts that organizations will alter the way they gate applications at deployment time, for instance.

What is the future of Kubernetes? We expect to see continued community investment in Kubernetes security generally, especially as it relates to teams’ ability to manage their clusters more cost-effectively by integrating security into the tools they use.

Verdict: Your Own or Someone Else’s Platform?

Everything depends on your preference for building (maintaining) the platform yourself or leaving it in the hands of a contractor. VMware has noticed a decline in the number of independently created and maintained Kubernetes clusters.

And explaining it is simple. Companies realize that maintaining DIY clusters requires a lot of time and resources, downtime is costly, and one or two experts are not enough to manage a dozen clusters with hundreds of containers. As a result, they choose a third-party platform, saving time and other resources while ensuring that their applications are always running.

Kubernetes is a relatively new system, and it is unquestionably not simple. It is therefore better to choose the solution that best fits your company’s resources rather than the cheapest one. The same holds true for architecture: if you plan to develop and host your applications in the cloud, design them for Kubernetes from the start.

IntelliSoft is a DevOps service provider familiar with Kubernetes use cases, best practices, and current trends. If you need help with a related project, let us know and get immediate consultation and assistance!