Imagine your application soaring in popularity, with users flooding in from around the globe. It’s exciting – until your servers start groaning under the pressure, frustrating users with sluggish performance or timeouts. This is when efficient traffic management becomes essential, and the unsung hero of your application’s stability and performance enters the scene: a Docker load balancer.

A Docker load balancer ensures that traffic is evenly distributed across multiple containers, preventing any single server from becoming a bottleneck. Whether you’re scaling an app or managing microservices, the ability to control and direct traffic is crucial to keeping everything running smoothly.

At IntelliSoft, with over 15 years of experience in IT solutions and cloud development, we’ve seen firsthand how the proper use of balancing can be the difference between a seamless user experience and one riddled with downtime. As businesses scale, the complexity of managing containerized applications grows – having a reliable traffic distribution strategy is no longer optional, but vital for success.

In this guide, we’ll dive deep into load balancing with Docker and Docker Compose, helping you understand how to optimize your traffic flow, prevent overloads, and ensure that your app keeps performing, no matter how much traffic it receives.

What is Load Balancing in the Age of Docker?

A load balancer is a critical component in modern application architectures, efficiently distributing incoming traffic across multiple servers or containers.

Its primary purpose is to prevent any single server from becoming overwhelmed by traffic, ensuring smooth and reliable performance.

In Docker deployments, where applications run in containers, load balancers act as gatekeepers, intelligently managing traffic to optimize resource utilization and avoid performance bottlenecks.

By distributing workloads, balancers help maintain application availability, allowing businesses to scale their operations without compromising speed or reliability. This is especially crucial in environments where microservices are deployed, as balancing ensures seamless communication between these services.

Core Functions of a Load Balancer

Traffic Distribution

The main task of a Docker load balancer is to distribute incoming traffic evenly across multiple containers or servers. This helps avoid situations where one server bears the entire load, leading to slowdowns or potential crashes. By routing traffic to the most available or least busy container, a load balancer keeps your application responsive and scalable, no matter how much traffic it encounters.

Ensuring Availability and Reliability

A load balancer plays a key role in ensuring your application’s high availability. It constantly monitors the health of each container and redirects traffic away from any that may be failing or underperforming. This action reduces the risk of downtime and keeps your application accessible, even if one or more containers experience issues.

Session Persistence and Sticky Sessions

Some applications require that users are directed to the same server or container throughout their session. This is where session persistence, also known as sticky sessions, comes into play. A balancer can track user sessions and ensure that subsequent requests are routed to the same container, enhancing the user experience for applications that rely on session data, such as e-commerce sites or login-based services.

Types of Load Balancers

Hardware-Based Load Balancers

Hardware-based load balancers are physical devices dedicated to traffic management. They are often found in large-scale, enterprise-level environments where high performance and security are critical. While these balancers offer robust performance, they come with a higher price tag and require specialized setup and maintenance.

Software-Based Load Balancers

In contrast, software-based load balancers, often used in Docker environments, are more flexible and cost-effective. They run on general-purpose servers and can be easily integrated with containerized applications. These balancers are ideal for cloud-based or hybrid environments where scalability, flexibility, and automation are key priorities.

Benefits of Load Balancing in Docker Deployments

Improved Scalability

Load balancers make it easier to scale Docker deployments by dynamically distributing traffic to containers as they are added or removed. This flexibility allows you to handle increased traffic volumes without the need for manual intervention or downtime.

High Availability

By monitoring the health of each container, load balancers ensure that traffic is always directed to healthy instances, reducing the likelihood of downtime and keeping your application available to users at all times.

Optimal Resource Utilization

With balancing in place, resources are used more efficiently. Traffic is distributed based on container availability, preventing overloading and ensuring that no single container is underutilized or overwhelmed.

Efficient Microservices Communication

In Docker environments where microservices architecture is common, balancers play a pivotal role in ensuring smooth communication between services. They help direct traffic between services, optimize internal operations, and improve the overall performance of your application.

Types of Load Balancing in Docker

In Docker deployments, load balancing can be categorized into two main types: internal load balancing and external load balancing. Each plays a distinct role in managing traffic, ensuring the smooth operation of services running within a Dockerized infrastructure.

Internal Load Balancing

Internal load balancing focuses on managing traffic within the Docker environment, ensuring efficient communication between containers and services. This type of balancing is particularly crucial in Docker Swarm or Kubernetes deployments, where multiple containers need to work together seamlessly.

Managing Traffic within the Docker Swarm

In a Docker Swarm environment, internal load balancers distribute traffic across different nodes and containers. This ensures that all services get the resources they need while preventing overload on any particular node. The swarm manager, responsible for orchestrating services, uses internal load balancers to dynamically distribute incoming requests to available containers based on factors like health status and workload.

How Internal Load Balancers Work Across Services

Internal balancers operate behind the scenes to handle traffic across microservices within the Docker environment. When a service is replicated across multiple containers, the internal load balancer directs requests to these containers, ensuring equal distribution of traffic. This is particularly important for stateless services where requests don’t rely on previous sessions and can be directed to any available instance.

External Load Balancing

External load balancing, on the other hand, manages traffic that originates outside the Docker environment, such as users accessing a web application.

In this scenario, Docker integrates with external balancers, which help route traffic to the appropriate containers running within the Docker infrastructure. External load balancing ensures that incoming traffic is efficiently distributed to the right containers, providing stability and preventing any container from overloading.

Distributing Traffic from External Sources

External balancers route incoming requests from users or external services to the right Docker containers. This distribution balances traffic and maintains the application’s performance under heavy loads by dynamically adjusting traffic flow based on container health and capacity.

Integrating with External Load Balancers

Docker integrates with popular external balancers such as NGINX and HAProxy, and with cloud-native solutions such as AWS Elastic Load Balancing, Azure Load Balancer, and Google Cloud Load Balancing. These solutions provide advanced traffic management features, including session persistence, failure detection, and automatic traffic rerouting.

NGINX

NGINX is a widely used, high-performance web server and reverse proxy that excels at load balancing. It can run alongside your Docker containers to manage traffic distribution, providing efficient balancing for HTTP, HTTPS, and TCP traffic. It supports advanced features such as SSL termination, caching, and session persistence, making it an excellent choice for external load balancing in Docker environments.

HAProxy

HAProxy is another popular external load balancer known for its reliability and scalability. It efficiently distributes traffic across containers and ensures the availability of services even during high-traffic periods. HAProxy supports layer 4 (TCP) and layer 7 (HTTP) balancing and provides features like traffic monitoring, SSL termination, and health checks to detect failed containers. Docker environments benefit from HAProxy’s robust ability to manage both internal and external traffic.

Cloud-Native Load Balancers

Docker also integrates effortlessly with cloud-native load-balancing solutions offered by major cloud providers:

- AWS Elastic Load Balancing (ELB). AWS ELB automatically distributes incoming traffic across multiple Amazon EC2 instances, containers, or microservices running in Docker. It ensures high availability by rerouting traffic away from unhealthy instances.

- Azure Load Balancer. Microsoft’s Azure Load Balancer distributes traffic across Docker containers in Azure’s cloud infrastructure. It ensures service reliability, scales based on demand, and offers features like automatic failover and session persistence.

- Google Cloud Load Balancing. Google Cloud’s load balancing service provides scalable traffic distribution across multiple Docker containers running in Google Cloud. It offers advanced routing features, global load balancing, and built-in auto-scaling for handling traffic spikes.

These cloud-native solutions simplify external balancing by offering out-of-the-box integrations, monitoring, and scaling features, making them ideal for Docker deployments running in cloud environments.

Docker Native Load Balancing Options

Docker provides native load-balancing capabilities that help manage traffic distribution within containerized environments. These native solutions are beneficial when deploying applications with Docker Swarm Mode, allowing traffic to be efficiently balanced between multiple services and containers.

Docker Swarm Mode Load Balancer

Docker Swarm Mode comes with a built-in balancer that simplifies the process of distributing traffic across services within the Swarm. This allows Docker to automatically handle traffic routing between containers, without the need for external tools or configurations.

Explanation of Docker Swarm Mode’s Built-in Load Balancer

In Docker Swarm Mode, each service you deploy is assigned a “virtual IP” (VIP), which the built-in load balancer uses to distribute traffic across the service’s containers. This means that any incoming traffic directed at the service’s VIP is automatically balanced across all replicas of that service. Swarm sends requests only to running tasks, and when the image or service defines a health check, tasks that report unhealthy are taken out of rotation and replaced, so services remain available and responsive even during failures.

How Service Discovery Works in Docker Swarm

Service discovery is a key feature of Docker Swarm’s load balancing system. It allows services within the Swarm to communicate with one another using simple DNS names, without needing to know the specific IP addresses of containers. When a service is deployed, Swarm automatically registers it with an internal DNS service, making it easy to find and communicate with other services. This eliminates the need for complex network configurations and allows seamless scaling, as new replicas are automatically added to the balancing pool.
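To see the virtual IP and DNS-based discovery on a running swarm, here is a small sketch. It assumes a service named `web` (a hypothetical name), an image that ships `nslookup` (as `nginx:alpine` does through BusyBox), and at least one task of the service running on the node where you type the commands.

```shell
# Show the virtual IP assigned to the service on each network it is attached to.
docker service inspect --format '{{json .Endpoint.VirtualIPs}}' web

# Pick one local container that belongs to the service.
CONTAINER_ID="$(docker ps -q --filter name=web | head -n 1)"

# The service name resolves to the single virtual IP ...
docker exec "$CONTAINER_ID" nslookup web

# ... while tasks.<service> lists the address of every replica.
docker exec "$CONTAINER_ID" nslookup tasks.web
```

Other services on the same overlay network only need the name `web`; they never have to track individual container addresses.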

Benefits and Limitations of Docker Swarm’s Native Load Balancing

Benefits

- Simplified traffic management. Docker’s native load balancer automatically distributes traffic across all service replicas, reducing the need for manual intervention.

- Service discovery. Built-in service discovery enables easy communication between services using DNS names, removing the complexity of managing IP addresses.

- Health monitoring. Swarm routes traffic only to running tasks and, when a health check is defined, takes unhealthy tasks out of rotation, ensuring availability.

- Integrated with Docker Swarm. Native integration with Docker Swarm eliminates the need for third-party load balancing solutions, simplifying the infrastructure.

Limitations

- Limited advanced features. While Docker’s native balancer is sufficient for most use cases, it lacks some of the advanced traffic management features offered by external solutions like NGINX or HAProxy (e.g., advanced session persistence and custom routing).

- Scaling challenges. As traffic increases, Docker Swarm’s native load balancing may struggle to handle very large-scale deployments effectively, requiring external solutions to ensure performance.

- Single point of failure. If all external traffic enters the Swarm through a single node’s address, that entry point remains a single point of failure unless you spread incoming traffic across several nodes.

Ingress and Overlay Networks

Docker Swarm uses two key network types to manage traffic and enable communication between services: ingress and overlay networks.

- Ingress Network. The ingress network handles incoming traffic from outside the Swarm, routing it to the appropriate service. Docker creates this network automatically when you initialize a swarm or join a node to one, and services that publish ports use it. The ingress network works in tandem with Docker’s built-in load balancer to distribute incoming traffic to available containers.

- Overlay Network. The overlay network enables communication between services running on different Swarm nodes. It is crucial for internal balancing, allowing services in different containers and hosts to communicate seamlessly. Docker’s load balancer uses the overlay network to route traffic between service replicas across different nodes.

How Overlay Networks Handle Load Balancing in Docker Swarm

When you deploy services across multiple nodes in a Docker Swarm, the overlay network comes into play. Each node in the Swarm can have containers running instances of the same service. The overlay network abstracts the underlying network complexity, creating a virtual network that spans all nodes. When traffic comes in, Docker Swarm’s load balancer uses the overlay network to distribute requests to the appropriate service replicas, regardless of where they are running in the Swarm.

Overlay networks also ensure that all service replicas can communicate with each other efficiently, even if they are on different physical machines. This network abstraction allows the Swarm to scale services without requiring complex networking setups.

Example of a Simple Docker Swarm Service Load Balancing Setup

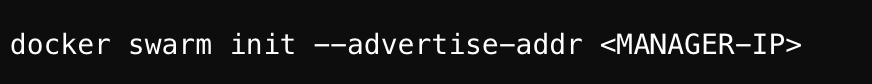

Here’s a step-by-step example of how to set up a basic Docker Swarm balancing scenario:

1. Create a Docker Swarm Cluster

First, you need to create a Docker Swarm cluster. On the first machine (manager node), initialize the Swarm:
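A minimal sketch of that initialization, assuming the manager is reachable at the placeholder address `192.168.1.10` (substitute your own host’s IP):

```shell
# Initialize a new swarm; this node becomes the first manager.
# 192.168.1.10 is a placeholder for the address other nodes use to reach it.
docker swarm init --advertise-addr 192.168.1.10
```

The command prints a ready-made `docker swarm join` line, including a token, for the worker nodes.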

Then, on additional machines (worker nodes), join the cluster by using the command provided by the swarm init output:
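For illustration, the join step might look like the following. The token value and manager address are placeholders, not real values; use what your own manager prints.

```shell
# On the manager: print the exact join command (including the token) again.
docker swarm join-token worker

# On each worker: join using the token and the manager address from that output.
WORKER_TOKEN="paste-the-token-printed-by-the-manager"
docker swarm join --token "$WORKER_TOKEN" 192.168.1.10:2377
```

Running `docker node ls` on the manager afterwards should list every node that joined.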

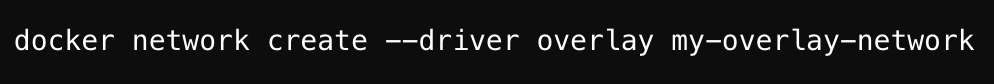

2. Create an Overlay Network

Once the cluster is set up, you need to create an overlay network that spans all nodes in the Swarm. This network will handle internal service communication and load balancing:
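A sketch of this step, run on a manager node. The network name `app-net` is a hypothetical choice for this example.

```shell
# Create an overlay network that spans every node in the swarm.
docker network create --driver overlay app-net

# Verify that the network exists and uses the overlay driver.
docker network ls --filter driver=overlay
```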

3. Deploy a Service

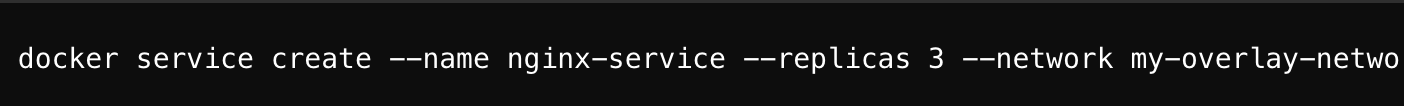

Now, you can deploy a service that will use the overlay network. For example, let’s deploy a simple NGINX service with multiple replicas:
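One way to write this deployment, assuming an overlay network named `app-net` already exists and that host port 8080 is free. The service name `web`, the port, and the image tag are illustrative choices.

```shell
# Deploy three NGINX replicas on the overlay network and publish
# port 8080 on every swarm node, forwarding to port 80 in the containers.
docker service create \
  --name web \
  --replicas 3 \
  --network app-net \
  --publish published=8080,target=80 \
  nginx:alpine

# Confirm that three tasks are running and see which nodes host them.
docker service ps web
```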

4. Test Load Balancing

Finally, test the load balancing by sending requests to the service. Since Docker’s built-in load balancer is in place, it will automatically distribute requests across the three NGINX replicas:
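A simple way to generate a few requests, assuming the service was published on port 8080 and you are on one of the swarm nodes:

```shell
# Send ten requests to the published port. Any node's address works,
# because the routing mesh listens on every node in the swarm.
for i in $(seq 1 10); do
  curl -s -o /dev/null -w "request $i -> HTTP %{http_code}\n" http://localhost:8080
done
```

The stock NGINX page does not say which replica answered, so to see the spread, compare the access-log lines per task with `docker service logs web`.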

Repeat the curl command multiple times, and you should see that the requests are distributed evenly across the NGINX replicas. You can also scale the service up or down, and Docker will adjust the load balancing accordingly:
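Scaling is a single command. The sketch below assumes the service is named `web`.

```shell
# Grow the service from three to five replicas.
docker service scale web=5

# Shrink it again; Swarm stops routing traffic to the removed tasks.
docker service scale web=2
```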

With these steps, you’ve created a Docker Swarm cluster, set up load balancing using an overlay network, and deployed a service that automatically distributes traffic across multiple replicas.

Third-Party Load Balancers for Docker

While Docker’s native load balancing is effective for many use cases, third-party load balancers offer advanced features and flexibility. These solutions enhance traffic management, optimize performance, and ensure high availability at a larger scale. Below is an overview of popular load balancers that integrate seamlessly with Docker.

NGINX

NGINX is a widely used web server that also functions as a reverse proxy and balancer, making it ideal for handling high-traffic Docker environments.

How NGINX Integrates with Docker for Load Balancing

- Reverse Proxy Setup. NGINX can act as a reverse proxy, directing incoming traffic to containers based on predefined rules.

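- Docker Integration. You can run NGINX itself in a container and point it at backends by service name through Docker’s built-in DNS. Open-source NGINX does not discover containers on its own, so the list of backends comes from its configuration file, which you can regenerate from a template when containers change.

- Health Checks. Open-source NGINX stops sending traffic to a container after repeated failed requests (passive checks); periodic active probes are a feature of NGINX Plus.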

- SSL Termination. It manages SSL/TLS certificates to handle secure communication with clients, offloading that responsibility from backend services.

Key Benefits of Using NGINX

- Flexible Configuration. Supports complex routing rules, headers, and caching strategies.

- High Performance. Optimized for speed and efficiency, handling large volumes of concurrent connections.

- Built-in Security. Provides DDoS protection, request filtering, and rate limiting.

- Open-Source and Commercial Versions. The open-source version is sufficient for many use cases, while NGINX Plus offers advanced features like active health checks and real-time monitoring.

HAProxy

HAProxy is a reliable and fast balancer known for its robust performance in high-traffic environments. It excels at load balancing and failover for Docker-based services.

Overview of HAProxy’s Role in Docker Environments

- Dynamic Service Discovery. HAProxy can pick up new containers through DNS-based discovery against Docker’s built-in DNS, or through a regenerated configuration, so backends do not all have to be listed by hand.

- Traffic Distribution Algorithms. Supports various algorithms (round-robin, least connections, etc.) to efficiently distribute traffic among containers.

- SSL Offloading. HAProxy offloads SSL processing from backend services, improving performance.

- Health Monitoring. Regularly checks the status of containers to ensure only healthy instances receive traffic.

Key Benefits of Using HAProxy

- Lightweight and Fast. Low resource consumption makes HAProxy ideal for high-performance environments.

- Advanced Routing Rules. Offers extensive configuration for routing based on headers, cookies, or URLs.

- Highly Scalable. Can efficiently handle millions of requests per second.

- Active Community and Support. Backed by strong community and enterprise support.

Traefik

Traefik is a modern, cloud-native reverse proxy and load balancer designed to work seamlessly with containerized environments, including Docker and Kubernetes.

Modern Reverse Proxy and Balancer for Docker

- Automatic Service Discovery. Traefik automatically discovers Docker services and configures routing rules in real time.

- Dynamic Configuration. Adapts to changes in the environment without requiring manual updates or reloads.

- Built-in Let’s Encrypt Integration. Automates the process of generating and renewing SSL certificates for secure communication.

- Dashboard and Metrics. Provides an interactive dashboard for monitoring traffic and container health.

Key Advantages of Using Traefik

- Ideal for Microservices. Designed specifically for dynamic, cloud-native, and microservice architectures.

- Simple Configuration. Minimal setup with dynamic routing for Docker containers.

- Supports Multiple Backends. Works with Docker Swarm, Kubernetes, and other orchestration platforms.

- Cloud-Ready. Built with native support for cloud environments, making it easy to scale and deploy across clouds.

Comparison of Third-Party Solutions

| Feature | NGINX | HAProxy | Traefik |
|---|---|---|---|
| Traffic Handling | HTTP, HTTPS, WebSocket, gRPC | HTTP, HTTPS, WebSocket | HTTP, HTTPS, WebSocket, gRPC |
| Load Balancing Algorithms | Round-robin, Least connections, IP-hash | Round-robin, Least connections, Source IP hash | Dynamic based on service discovery |
| SSL Termination | Yes | Yes | Yes |
| Sticky Sessions | Via custom configuration | Yes | Yes |
| Service Discovery | Manual or with DNS-based discovery | Manual or with DNS-based discovery | Automatic via Docker labels |
| Performance | High-performance, suitable for large-scale environments | Highly reliable, great for large-scale applications | Built for dynamic environments with automatic updates |
| Best for | Highly customizable, enterprise-grade use cases | High-availability, advanced features, large-scale setups | Agile environments with frequent changes and auto-scaling |
| Ease of Setup | Moderate, manual configuration | Moderate, requires manual configuration | Easy, auto-configures with Docker labels |

Implementing Load Balancing in Docker: Step-by-Step Guide

Setting Up a Simple Load Balancer with Docker Compose

1. Install Docker Compose (if not already installed).

2. Create a Docker Compose File. In your project directory, create a docker-compose.yml file. In this file, define multiple services and a balancer, such as NGINX:
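As a sketch of such a file, the snippet below writes a minimal `docker-compose.yml`. The service names `web` and `lb`, the `nginx:alpine` image standing in for your application, and host port 8080 are all assumptions made for this example. It also assumes Docker Compose v2, which honors `deploy.replicas` when you run `docker compose up`.

```shell
# Write a minimal docker-compose.yml with a replicated backend and an NGINX balancer.
cat > docker-compose.yml <<'EOF'
services:
  web:
    image: nginx:alpine        # stand-in for your application image
    expose:
      - "80"                   # reachable by other services, not published on the host
    deploy:
      replicas: 3              # start three containers for this service
  lb:
    image: nginx:alpine
    ports:
      - "8080:80"              # host port 8080 -> load balancer port 80
    volumes:
      - ./nginx.conf:/etc/nginx/nginx.conf:ro
    depends_on:
      - web
EOF
```

Only the balancer publishes a host port; the replicas stay on the internal Compose network, so they never compete for the same port on the host.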

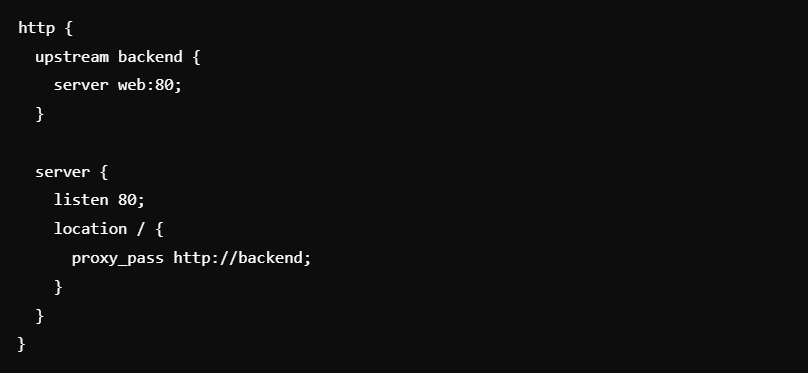

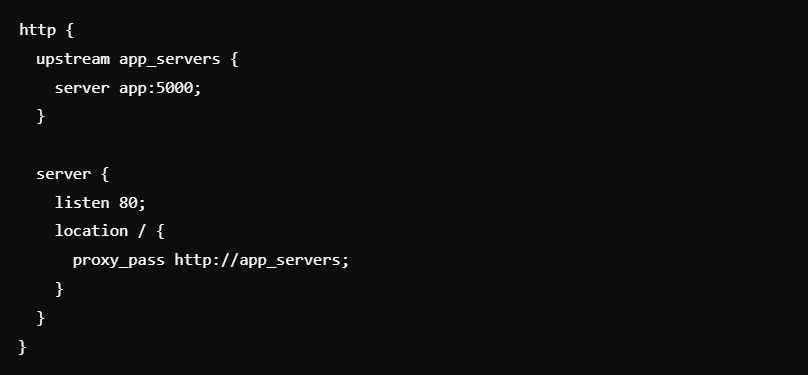

3. Configure NGINX Load Balancer. In the same directory, create an nginx.conf file to define NGINX as the load balancer:
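A minimal configuration could look like this. It assumes the backend is a Compose service named `web` listening on port 80; the upstream name `backend` is an arbitrary label.

```shell
# Write a minimal nginx.conf that proxies every request to the "web" service.
cat > nginx.conf <<'EOF'
events {}

http {
  upstream backend {
    # "web" is the Compose service name. Docker's DNS returns one address per
    # replica, and NGINX round-robins across the addresses it resolved at startup.
    server web:80;
  }

  server {
    listen 80;

    location / {
      proxy_pass http://backend;
      proxy_set_header Host $host;
      proxy_set_header X-Real-IP $remote_addr;
    }
  }
}
EOF
```

Because open-source NGINX resolves the service name when it loads its configuration, replicas added later are picked up only after a reload, for example with `nginx -s reload` inside the balancer container.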

4. Start the Docker Compose Stack. Run the following command to bring up the stack:
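With Docker Compose v2 this might be run as follows. The service name `web` is an assumption, and the `--scale` flag requires that `web` does not publish a fixed host port. On older installations the standalone `docker-compose` binary takes the same arguments.

```shell
# Start the stack in the background with three containers for the "web" service.
docker compose up -d --scale web=3

# List the running containers of the stack.
docker compose ps
```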

This command will start three replicas of the web service and an NGINX container to load balance the requests across the replicas.

Configuring Docker Services for Load Balancing

In Docker, services can be configured for balancing either through Docker Swarm or using third-party balancers like NGINX, HAProxy, or Traefik. When using Docker Compose for orchestration, you can configure services to be balanced by adding multiple replicas and defining a balancer in the configuration.

1. Define Service Replicas. In the docker-compose.yml file, specify the number of replicas for a service under the deploy section:
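For example, the fragment below sets three replicas for a service assumed to be called `web`. It is written to `docker-compose.override.yml`, a file Compose merges into `docker-compose.yml` automatically, so the main file stays untouched; putting the same keys directly into the main file works just as well.

```shell
# Write only the replica setting to an override file.
cat > docker-compose.override.yml <<'EOF'
services:
  web:
    deploy:
      replicas: 3   # run three containers of the "web" service
EOF
```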

This configuration ensures that the service is replicated across multiple containers.

2. Expose Service Ports. Ensure that each service is exposed on a port that the load balancer can forward traffic to:
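A sketch of the port settings, assuming a backend service `web` and a balancer service `lb` (both hypothetical names). The fragment is saved under the made-up file name `ports-fragment.yml` and can be merged by passing several `-f` options to `docker compose`.

```shell
# Write a fragment that separates internal exposure from host publishing.
cat > ports-fragment.yml <<'EOF'
services:
  web:
    expose:
      - "80"          # container port visible to the balancer on the shared network
  lb:
    ports:
      - "8080:80"     # only the load balancer is published on the host
EOF
```

Using `expose` for the replicas and `ports` only for the balancer avoids host-port conflicts when the backend is scaled.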

Managing Multiple Containers With a Load Balancer

Managing multiple containers with a balancer ensures that traffic is distributed evenly among services, and balancing can scale dynamically as containers are added or removed.

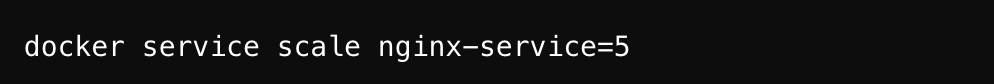

1. Scaling Services. You can dynamically scale services by increasing the number of replicas:
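With Docker Compose, scaling a service might look like this. It assumes the services are named `web` and `lb`, and that the balancer is open-source NGINX, which needs a reload to see new replicas.

```shell
# Scale the "web" service to five containers without editing the compose file.
docker compose up -d --scale web=5

# Ask NGINX to re-resolve the service name so the new replicas receive traffic.
docker compose exec lb nginx -s reload
```

In Swarm mode the equivalent is `docker service scale web=5`, and no reload is needed because the routing mesh tracks replicas itself.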

This command scales the web service to five containers, and the balancer automatically distributes traffic across all replicas.

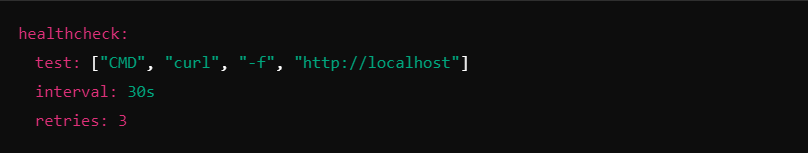

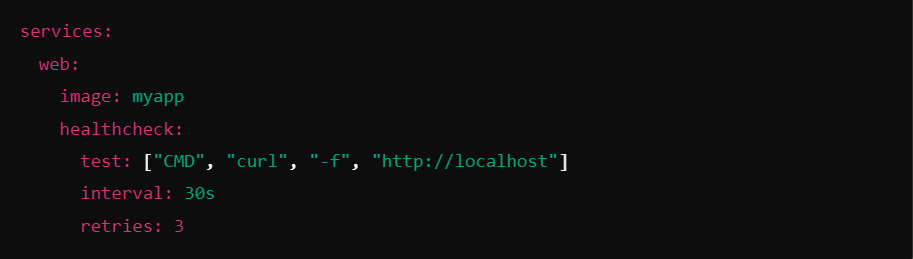

2. Monitoring Container Health. Load balancers often rely on health checks to route traffic only to healthy containers. Configure health checks in the docker-compose.yml file to ensure faulty containers don’t receive traffic:
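A possible health check for a service assumed to be called `web`, saved as a fragment under the made-up name `healthcheck-fragment.yml`. The probe uses `wget`, which is available in Alpine-based images such as `nginx:alpine`; other images may need `curl` or an application-specific command.

```shell
# Write a health check fragment for the "web" service.
cat > healthcheck-fragment.yml <<'EOF'
services:
  web:
    healthcheck:
      # Succeeds when the container answers HTTP requests on port 80.
      test: ["CMD", "wget", "-q", "--spider", "http://127.0.0.1/"]
      interval: 10s      # run the probe every 10 seconds
      timeout: 3s        # treat a probe as failed after 3 seconds
      retries: 3         # mark the container unhealthy after 3 failures in a row
      start_period: 5s   # grace period while the container boots
EOF
```

The resulting status appears in the `docker ps` output. Swarm acts on it by replacing unhealthy tasks, while plain Compose only reports it.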

Example: Setting Up NGINX as a Load Balancer for Docker Containers

1. Create the Docker Compose File

2. Configure NGINX. In the nginx.conf file, define the balancing rules:

3. Start the Services. Use Docker Compose to start the services and the NGINX load balancer:

4. Test Load Balancing. Access localhost:8080 in your browser or use curl to send requests to the NGINX load balancer:
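The four steps above can be sketched end to end as follows. Everything named here is an assumption for illustration: the directory `nginx-lb-example`, the services `web1` to `web3`, and the public `traefik/whoami` image, chosen because each container answers with its own hostname, which makes the distribution visible.

```shell
# Work in a separate directory so no existing files are overwritten.
mkdir -p nginx-lb-example && cd nginx-lb-example

# Step 1: three identical backends and one NGINX container in front of them.
cat > docker-compose.yml <<'EOF'
services:
  web1:
    image: traefik/whoami
  web2:
    image: traefik/whoami
  web3:
    image: traefik/whoami
  nginx:
    image: nginx:alpine
    ports:
      - "8080:80"
    volumes:
      - ./nginx.conf:/etc/nginx/nginx.conf:ro
    depends_on: [web1, web2, web3]
EOF

# Step 2: round-robin (the NGINX default) across the three backends.
cat > nginx.conf <<'EOF'
events {}
http {
  upstream backend {
    server web1:80;
    server web2:80;
    server web3:80;
  }
  server {
    listen 80;
    location / {
      proxy_pass http://backend;
    }
  }
}
EOF
```

For steps 3 and 4, run `docker compose up -d` in that directory and then repeat `curl -s http://localhost:8080 | grep Hostname` a few times; the reported hostname should rotate between the three backend containers.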

Monitoring and Managing Traffic Through Load Balancers

Monitoring traffic through balancers is critical for optimizing performance and ensuring that services are evenly distributed across containers. Here’s how you can monitor and manage traffic effectively:

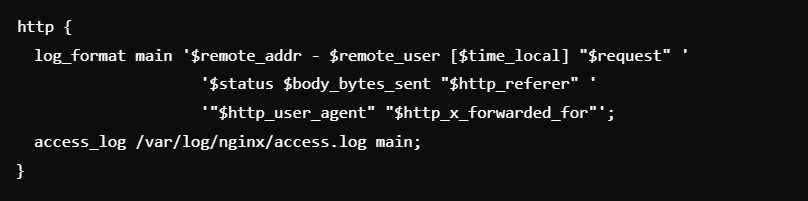

1. Monitor Traffic with NGINX. NGINX provides a variety of logging options to monitor traffic. You can configure access logs in the nginx.conf file:
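As an illustration, the snippet below writes a log format that records which backend served each request and how long it took. The format name `upstreamlog` and the file name `nginx-logging.conf` are made up for this example; the directives belong inside the `http { }` block of your `nginx.conf`.

```shell
# Write an access-log snippet that includes upstream address and timings.
cat > nginx-logging.conf <<'EOF'
# Place inside the http { } block of nginx.conf.
log_format upstreamlog '$remote_addr [$time_local] "$request" $status '
                       'upstream=$upstream_addr '
                       'request_time=$request_time '
                       'upstream_time=$upstream_response_time';

access_log /var/log/nginx/access.log upstreamlog;
EOF
```

In the official NGINX image that log path is linked to standard output, so the entries show up in `docker logs` or `docker compose logs` for the balancer container.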

2. Using Monitoring Tools. Use Docker monitoring tools like Prometheus, Grafana, or cAdvisor to visualize traffic flow and balancer performance. These tools allow you to track metrics such as:

- Request rate. The number of requests handled by the balancer per second.

- Response times. How long it takes for a request to be processed.

- Container health. Monitoring which containers are up and running.

3. Managing Load Balancers with Docker. Docker’s built-in CLI commands allow you to scale services, restart containers, and monitor logs directly from the terminal:
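A few commands that cover these tasks in a Compose setup, assuming services named `lb` and `web`:

```shell
# Show the last 50 log lines of the load balancer.
docker compose logs --tail 50 lb

# Scale the backend service to five containers.
docker compose up -d --scale web=5

# Restart the backend containers.
docker compose restart web

# Print a one-off snapshot of CPU, memory, and network usage per container.
docker stats --no-stream
```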

Best Practices for Docker Load Balancing

Effective balancing in Docker environments requires more than just traffic distribution – it demands careful planning for reliability, scalability, and resilience. Below are some essential practices to ensure optimal performance for Docker-based services.

Ensuring High Availability Through Load Balancers

High availability ensures that your services remain accessible, even during unexpected disruptions. This can be achieved through a multi-layered load-balancing strategy.

- Distribute Traffic Across Multiple Nodes. Use both internal (within Docker Swarm) and external load balancers to evenly distribute workloads across different nodes and containers.

- Deploy Redundant Load Balancers. Implement multiple balancers in an active-passive or active-active setup to prevent single points of failure.

- Leverage DNS Failover. Use DNS-based balancing to reroute traffic to healthy instances in case of a node failure or downtime.

- Global Server Load Balancing (GSLB). If you are running Docker clusters across multiple regions, a GSLB ensures that traffic is routed to the nearest and most responsive instance.

Auto-Scaling Based on Load

Auto-scaling ensures that your services can dynamically respond to changing workloads by adding or removing containers. This practice avoids resource bottlenecks and keeps your system efficient.

- Horizontal Scaling. Add more container instances when demand increases. Kubernetes and cloud platforms such as AWS and Azure provide auto-scaling; Docker Swarm scales on command and needs external tooling to trigger scaling automatically.

- Set Resource Limits. Define CPU and memory thresholds to trigger scaling events automatically when the load surpasses predefined limits.

- Monitor Traffic Patterns. Use monitoring tools to track peak traffic times and preemptively scale up to prevent downtime.

- Integrate with Cloud Autoscalers. Leverage cloud-native auto-scaling tools (e.g., AWS Auto Scaling or Azure Autoscale) to manage large-scale containerized deployments seamlessly.

Setting Up Health Checks for Services

Health checks ensure that only functional containers receive traffic, boosting reliability and minimizing downtime.

- Active Health Checks. The load balancer regularly probes each container to verify it is responsive and healthy. HAProxy and Traefik support this in their open-source editions; in NGINX it is an NGINX Plus feature.

- Passive Health Checks. Detect container failures through failed connection attempts or timeouts, then stop directing traffic to the faulty service.

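- Docker’s Native Health Checks. Use Docker’s HEALTHCHECK instruction to have Docker report each container’s health. Docker Swarm replaces tasks that become unhealthy; standalone Docker only marks the container as unhealthy, so restarting it requires an orchestrator or external tooling.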

- Implement Graceful Shutdowns. Ensure that balancers redirect traffic before taking containers offline for maintenance to avoid dropped requests.

Here are some examples:
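Two small sketches, both under stated assumptions: a Dockerfile for an Alpine-based NGINX image (where `wget` is available), and a passive health check for open-source NGINX with hypothetical backends `web1` and `web2`. The file name `upstream-passive.conf` is made up for this example.

```shell
# Docker's native check: a HEALTHCHECK instruction baked into the image.
cat > Dockerfile <<'EOF'
FROM nginx:alpine
HEALTHCHECK --interval=10s --timeout=3s --retries=3 \
  CMD wget -q --spider http://127.0.0.1/ || exit 1
EOF

# Passive check in open-source NGINX: after 3 failed attempts within 30s,
# a server is skipped for the following 30s.
cat > upstream-passive.conf <<'EOF'
# Place inside the http { } block of nginx.conf.
upstream backend {
  server web1:80 max_fails=3 fail_timeout=30s;
  server web2:80 max_fails=3 fail_timeout=30s;
}
EOF
```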

Handling Failover and Redundancy

Failover strategies ensure uninterrupted service by switching to a backup system when primary components fail. Redundancy minimizes risk by having standby components ready to take over at any time.

- Active-Passive Failover. Configure a secondary balancer to become active only when the primary one fails, ensuring smooth handover.

- Active-Active Load Balancing. Distribute traffic evenly between multiple balancers, so if one fails, others take over seamlessly.

- Clustered Environments. Deploy your Docker services across multiple nodes in a cluster to ensure redundancy and continuous service availability.

- Session Persistence in Failover. Use sticky sessions to maintain user sessions during failover to prevent interruptions in user experience.

Monitoring and Performance Optimization

Effective monitoring and performance optimization are essential to maintain smooth operations in Docker environments. This section covers tools for monitoring Docker load balancers, key metrics to track, and practical strategies for optimizing performance under heavy loads.

Tools for Monitoring Docker Load Balancers

A robust monitoring setup ensures that your load balancers and containers are performing efficiently. Below are some popular tools for tracking metrics and diagnosing performance issues.

- Prometheus. A powerful open-source monitoring and alerting toolkit that collects time-series data and integrates well with Docker environments. Prometheus can monitor request rates, container health, and resource utilization.

- Grafana. Grafana works seamlessly with Prometheus, providing interactive dashboards and visualizations. It offers real-time insights into system performance, helping you identify bottlenecks.

- cAdvisor. Built specifically for containers, cAdvisor monitors container-level metrics such as CPU, memory, and network usage, providing deep insights into individual container health.

- ELK Stack (Elasticsearch, Logstash, and Kibana). The ELK stack is ideal for centralizing and analyzing logs from Docker containers and load balancers. Elasticsearch indexes the data, Logstash processes it, and Kibana visualizes performance trends and anomalies.

Metrics to Track for Effective Load Balancing

Tracking key performance metrics allows you to detect potential issues before they impact your services. Here are the metrics you should monitor regularly:

- Request Rate (Requests per Second). Measures how many requests your load balancers are handling. Spikes in request rate may indicate a need for scaling.

- Latency (Response Time). Tracks the time taken to process requests. High latency can signal overloaded containers or inefficient balancing.

- Error Rates (HTTP 4xx/5xx Status Codes). Monitoring error rates helps detect failed requests or service disruptions. A rise in 5xx errors often points to backend issues, while 4xx errors may indicate client-side problems.

- CPU and Memory Utilization. Ensures that your containers and balancers are operating within optimal resource limits. Sudden spikes might require scaling to prevent slowdowns.

- Active Connections. Tracks the number of open connections handled by the load balancer at a given time. High connection counts may require adjustment to the load balancer configuration or scaling policies.

- Health Check Status. Keeps track of the health of individual containers. If containers fail health checks, they are taken out of rotation to prevent service degradation.

Tips to Optimize Performance in High-Traffic Environments

Performance optimization strategies ensure your Docker deployment performs reliably, even under heavy workloads. Here are some best practices:

- Implement Horizontal Scaling. Scale your services by adding more container instances rather than increasing individual container capacity. This approach improves fault tolerance and resource distribution.

- Use Caching. Reduce load on backend containers by caching frequently accessed data or content at the load balancer. Tools such as Redis or Varnish Cache can be used alongside load balancers.

- Optimize Load Balancer Configuration. Tune your balancer by selecting the most appropriate algorithms (e.g., round-robin, least connections) and setting appropriate timeouts to avoid bottlenecks.

- Implement Sticky Sessions. Sticky sessions (session persistence) ensure that requests from the same client are directed to the same backend container. This is crucial for applications requiring consistent session data.

- Monitor and Adjust Resource Limits. Define limits on CPU, memory, and network resources for containers to prevent them from hogging resources or causing throttling issues.

- Enable SSL Termination at the Load Balancer. Offload the SSL/TLS encryption process to the load balancer to reduce overhead on backend containers and improve performance.
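Several of these tips translate directly into balancer configuration. The sketch below shows one possible NGINX setup with a least-connections algorithm, upstream keepalive, and explicit timeouts. The backend names, the file name `tuned-upstream.conf`, and all numeric values are illustrative assumptions, not recommendations.

```shell
# Write a tuned upstream and server block for NGINX.
cat > tuned-upstream.conf <<'EOF'
# Place inside the http { } block of nginx.conf.
upstream backend {
  least_conn;                 # prefer the server with the fewest active connections
  # ip_hash;                  # alternative: pin each client IP to one server (sticky sessions)
  server web1:80;
  server web2:80;
  keepalive 32;               # keep up to 32 idle connections to the backends open
}

server {
  listen 80;

  location / {
    proxy_pass http://backend;
    proxy_http_version 1.1;          # needed for upstream keepalive
    proxy_set_header Connection "";  # needed for upstream keepalive
    proxy_connect_timeout 5s;        # give up quickly on unreachable backends
    proxy_read_timeout 30s;          # cap the wait for a backend response
  }
}
EOF
```

Switching between `least_conn` and `ip_hash` is a trade-off: the first spreads load more evenly, the second keeps a client on one container at the cost of less even distribution.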

Conclusion

At IntelliSoft, we’ve seen firsthand how the right balancing strategy can make or break a containerized deployment.

Docker balancers aren’t just traffic managers – they are the foundation that ensures your applications run smoothly, scale seamlessly, and stay available even when unexpected challenges arise.

Over our 15 years of experience, we’ve helped businesses fine-tune their infrastructures, combining internal and external load balancing solutions with the best monitoring tools to keep everything running at peak performance.

The secret to sustainable growth lies in staying ahead of traffic demands. Whether it’s choosing the right load balancer – NGINX, HAProxy, or Traefik – or implementing scaling strategies to handle spikes, there’s no one-size-fits-all solution. It takes a mix of tools, expertise, and continuous monitoring to get it right. And that’s exactly where we come in.

At IntelliSoft, we don’t just deploy containers – we create resilient, scalable systems designed to grow with your business. If you’re looking to master the art of traffic distribution and ensure your services are future-proof, let’s work together to build the infrastructure you need to succeed.

We’re ready to bring the insights and expertise that come from a decade and a half of innovation. Contact us, and let’s make your Docker deployments more than just containers; let’s turn them into the backbone of your success.